THE AJAR READING LIST: Back to School 2021

Summer dragged on a little in the community!

We have gathered the cream of the crop from the past few months: major studies not to be missed, guidelines, reviews and even a video. Enjoy, there is something for everyone 🤓

The AJAR Paris reading-list team.

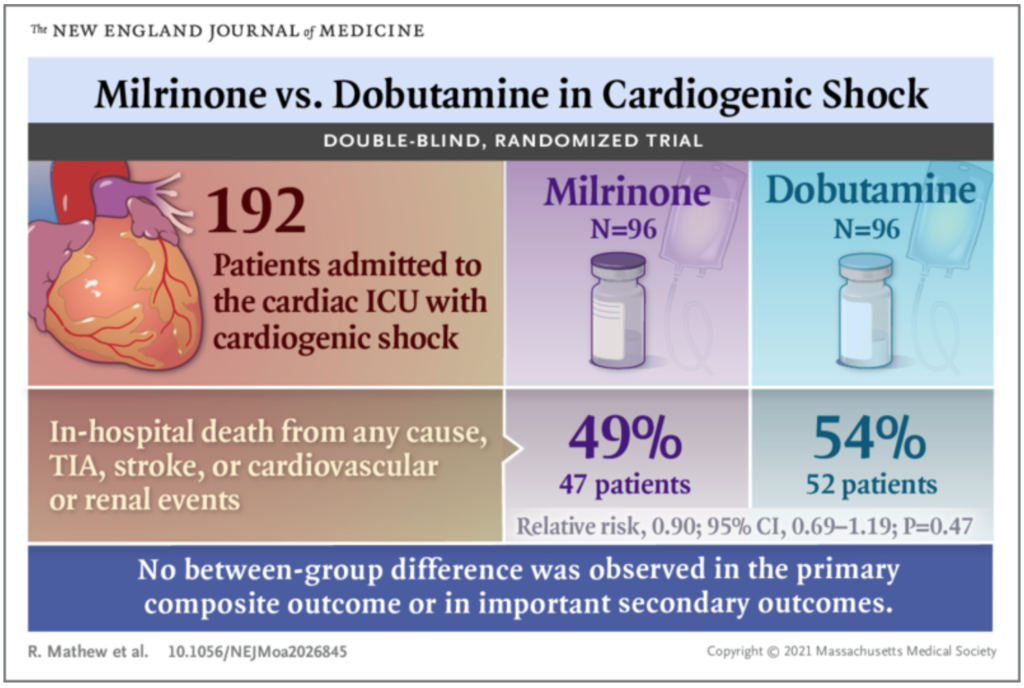

✦ Milrinone as Compared with Dobutamine in the Treatment of Cardiogenic Shock

DOI: 10.1056/NEJMoa2026845

BACKGROUND

Cardiogenic shock is associated with substantial morbidity and mortality. Although inotropic support is a mainstay of medical therapy for cardiogenic shock, little evidence exists to guide the selection of inotropic agents in clinical practice.

METHODS

We randomly assigned patients with cardiogenic shock to receive milrinone or dobutamine in a double-blind fashion. The primary outcome was a composite of in-hospital death from any cause, resuscitated cardiac arrest, receipt of a cardiac transplant or mechanical circulatory support, nonfatal myocardial infarction, transient ischemic attack or stroke diagnosed by a neurologist, or initiation of renal replacement therapy. Secondary outcomes included the individual components of the primary composite outcome.

RESULTS

A total of 192 participants (96 in each group) were enrolled. The treatment groups did not differ significantly with respect to the primary outcome; a primary outcome event occurred in 47 participants (49%) in the milrinone group and in 52 participants (54%) in the dobutamine group (relative risk, 0.90; 95% confidence interval [CI], 0.69 to 1.19; P=0.47). There were also no significant differences between the groups with respect to secondary outcomes, including in-hospital death (37% and 43% of the participants, respectively; relative risk, 0.85; 95% CI, 0.60 to 1.21), resuscitated cardiac arrest (7% and 9%; hazard ratio, 0.78; 95% CI, 0.29 to 2.07), receipt of mechanical circulatory support (12% and 15%; hazard ratio, 0.78; 95% CI, 0.36 to 1.71), or initiation of renal replacement therapy (22% and 17%; hazard ratio, 1.39; 95% CI, 0.73 to 2.67).
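As a quick arithmetic check (not part of the trial's own analysis), the crude relative risk for the primary outcome can be recomputed from the raw counts above. A minimal Python sketch; the helper name `relative_risk` is our own:

```python
def relative_risk(events_a: int, n_a: int, events_b: int, n_b: int) -> float:
    """Crude relative risk of group A vs group B: (events_a / n_a) / (events_b / n_b)."""
    return (events_a / n_a) / (events_b / n_b)


# Primary composite outcome: 47 of 96 on milrinone vs 52 of 96 on dobutamine.
milrinone_risk = 47 / 96
dobutamine_risk = 52 / 96
rr = relative_risk(47, 96, 52, 96)
print(f"milrinone {milrinone_risk:.0%}, dobutamine {dobutamine_risk:.0%}, RR {rr:.2f}")
```

This reproduces the reported point estimate (0.90); the 95% CI depends on the authors' statistical method and is not recomputed here.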

CONCLUSIONS

In patients with cardiogenic shock, no significant difference between milrinone and dobutamine was found with respect to the primary composite outcome or important secondary outcomes. (Funded by the Innovation Fund of the Alternative Funding Plan for the Academic Health Sciences Centres of Ontario; ClinicalTrials.gov number, NCT03207165.)

✦ Therapeutic Anticoagulation with Heparin in Critically Ill Patients with Covid-19

DOI: 10.1056/NEJMoa2103417

BACKGROUND

Thrombosis and inflammation may contribute to morbidity and mortality among patients with coronavirus disease 2019 (Covid-19). We hypothesized that therapeutic-dose anticoagulation would improve outcomes in critically ill patients with Covid-19.

METHODS

In an open-label, adaptive, multiplatform, randomized clinical trial, critically ill patients with severe Covid-19 were randomly assigned to a pragmatically defined regimen of either therapeutic-dose anticoagulation with heparin or pharmacologic thromboprophylaxis in accordance with local usual care. The primary outcome was organ support–free days, evaluated on an ordinal scale that combined in-hospital death (assigned a value of −1) and the number of days free of cardiovascular or respiratory organ support up to day 21 among patients who survived to hospital discharge.

RESULTS

The trial was stopped when the prespecified criterion for futility was met for therapeutic-dose anticoagulation. Data on the primary outcome were available for 1098 patients (534 assigned to therapeutic-dose anticoagulation and 564 assigned to usual-care thromboprophylaxis). The median value for organ support–free days was 1 (interquartile range, −1 to 16) among the patients assigned to therapeutic-dose anticoagulation and was 4 (interquartile range, −1 to 16) among the patients assigned to usual-care thromboprophylaxis (adjusted proportional odds ratio, 0.83; 95% credible interval, 0.67 to 1.03; posterior probability of futility [defined as an odds ratio <1.2], 99.9%). The percentage of patients who survived to hospital discharge was similar in the two groups (62.7% and 64.5%, respectively; adjusted odds ratio, 0.84; 95% credible interval, 0.64 to 1.11). Major bleeding occurred in 3.8% of the patients assigned to therapeutic-dose anticoagulation and in 2.3% of those assigned to usual-care pharmacologic thromboprophylaxis.

CONCLUSIONS

In critically ill patients with Covid-19, an initial strategy of therapeutic-dose anticoagulation with heparin did not result in a greater probability of survival to hospital discharge or a greater number of days free of cardiovascular or respiratory organ support than did usual-care pharmacologic thromboprophylaxis.

✦ Heparin Resistance — Clinical Perspectives and Management Strategies

DOI: 10.1056/NEJMra2104091

Review article

Drug resistance is defined as the lack of expected response to a standard therapeutic dose of a drug or as resistance resulting from biologic changes in the target, as occurs in antibiotic resistance. Heparin resistance, the failure to achieve a specified anticoagulation level despite the use of what is considered to be an adequate dose of heparin, is neither well understood nor well defined. Heparin resistance usually refers to an effect of unfractionated heparin, for which doses are measured and adjusted, rather than low-molecular-weight heparin, which is not routinely monitored with laboratory testing. Although it is infrequently invoked in inpatient settings, heparin resistance has been reported in critically ill patients with coronavirus disease 2019 (Covid-19) who are at high risk for thrombosis. This review provides a clinical summary of heparin resistance and potential management strategies.

✦ Placement of a Double-Lumen Endotracheal Tube

An instructional video on placing a double-lumen tube, its indications and more!

Placement of a double-lumen endotracheal tube is an airway-management technique that permits isolation and selective ventilation of a single lung. Double-lumen tubes are bifurcated into a bronchial lumen and a tracheal lumen, each of which can provide ventilation to a single lung. The bronchial lumen is longer and has a distal opening that allows it to be placed in either the right or the left main bronchus. The tracheal lumen is designed to be placed above the carina. Each lumen has a color-coded cuff. The cuff on the bronchial lumen is often blue, and the cuff on the tracheal lumen is often clear. Both lumens are connected to the breathing circuit by means of a Y-connector.

✦ Aspergillus Infections

DOI: 10.1056/NEJMra2027424

Review article

Aspergillus conidia (spores) are ubiquitous in the environment and thus unavoidable. In soil and on other vegetative or moist material, aspergillus species exist as saprobes, digesting dead or dying organic material. This highly competitive environment requires aspergillus species to survive under variable temperature, pH, water, and nutrient conditions. Oxidative damage and environmental antifungal exposure also drive fungal adaptation, and these factors together account for numerous aspects of aspergillus virulence.1,2 The vast majority of human encounters with inhaled conidia do not result in measurable colonization. For persons who do acquire and retain conidia, a spectrum of clinically significant outcomes can occur, from asymptomatic colonization to invasive infection (i.e., disease).

Spores from this genus of mold have the appropriate surface charge, hydrophobicity, and size (2 to 5 μm) to propagate by transfer in air, colonizing airways in the pulmonary tree and sinuses or leading to cutaneous or ocular infection. After inhalation of airborne fungal conidia, clinical manifestations of disease are largely dependent on the host immune response. A wide range of clinical syndromes can be observed. Hypersensitivity to inhaled airborne conidia causes allergic bronchopulmonary aspergillosis or asthma with fungal sensitization, whereas an aspergillus fungus ball (aspergilloma) or chronic pulmonary aspergillosis develops more frequently in persons with structural lung disease. Invasive infection is the most devastating form of disease and is primarily observed in persons with clinically significant immunosuppression. Small foci of growth are unchecked, and vegetative hyphae penetrate tissue planes and blood vessels, with the opportunity for hematogenous spread and dissemination to multiple organ systems. New risk factors, such as a stay in the intensive care unit (ICU), influenza or severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) infection, and chimeric antigen receptor T-cell (CAR-T) therapy, have recently been observed.

Despite advances in antifungal prophylactic strategies, diagnostic tests, and treatments, morbidity and mortality related to invasive aspergillosis remain high. With this review, we report progress in the understanding of this infection, building on the 2009 review in the Journal.

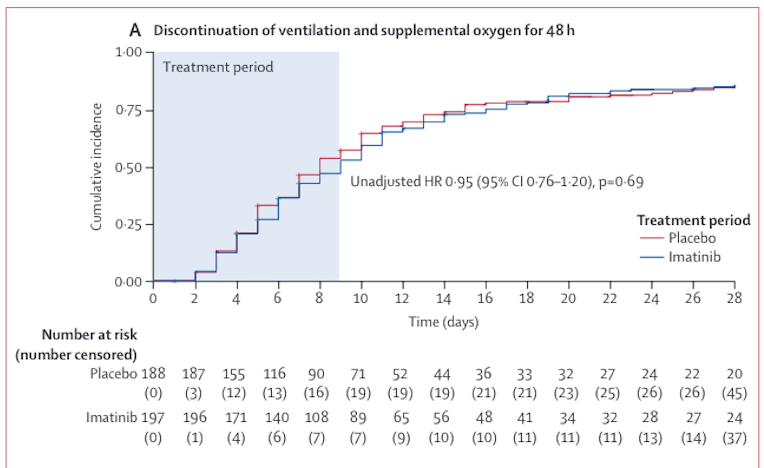

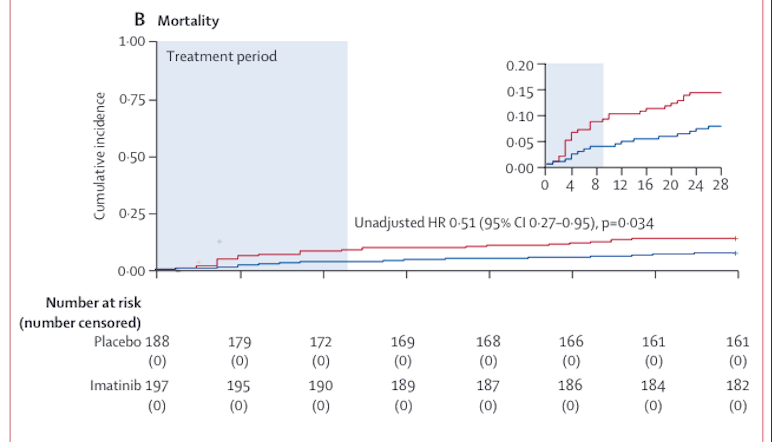

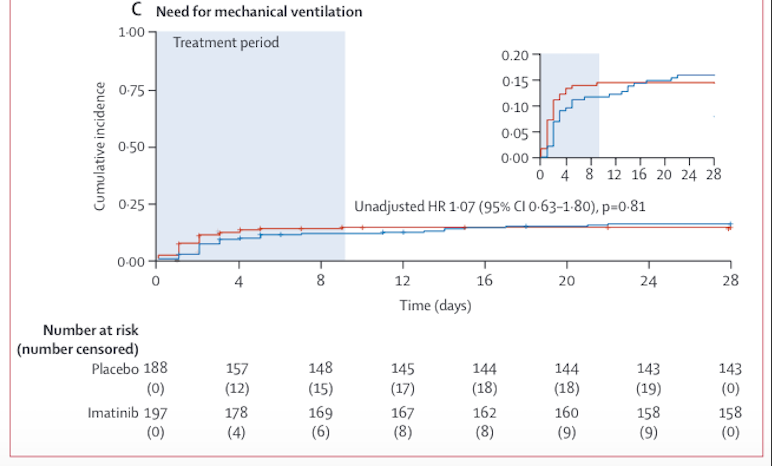

✦ Imatinib in patients with severe COVID-19: a randomised, double-blind, placebo-controlled, clinical trial

DOI: 10.1016/S2213-2600(21)00237-X

Imatinib (a tyrosine kinase inhibitor, TKI) might inhibit pulmonary oedema and thereby reduce hypoxaemia in COVID-19. Double-blind randomised controlled trial. No effect on the primary outcome, time to discontinuation of mechanical ventilation and supplemental oxygen for more than 48 consecutive hours.

Article abstract:

Background The major complication of COVID-19 is hypoxaemic respiratory failure from capillary leak and alveolar oedema. Experimental and early clinical data suggest that the tyrosine-kinase inhibitor imatinib reverses pulmonary capillary leak.

Methods This randomised, double-blind, placebo-controlled, clinical trial was done at 13 academic and non-academic teaching hospitals in the Netherlands. Hospitalised patients (aged ≥18 years) with COVID-19, as confirmed by an RT-PCR test for SARS-CoV-2, requiring supplemental oxygen to maintain a peripheral oxygen saturation of greater than 94% were eligible. Patients were excluded if they had severe pre-existing pulmonary disease, had pre-existing heart failure, had undergone active treatment of a haematological or non-haematological malignancy in the previous 12 months, had cytopenia, or were receiving concomitant treatment with medication known to strongly interact with imatinib. Patients were randomly assigned (1:1) to receive either oral imatinib, given as a loading dose of 800 mg on day 0 followed by 400 mg daily on days 1–9, or placebo. Randomisation was done with a computer-based clinical data management platform with variable block sizes (containing two, four, or six patients), stratified by study site. The primary outcome was time to discontinuation of mechanical ventilation and supplemental oxygen for more than 48 consecutive hours, while being alive during a 28-day period. Secondary outcomes included safety, mortality at 28 days, and the need for invasive mechanical ventilation. All efficacy and safety analyses were done in all randomised patients who had received at least one dose of study medication (modified intention-to-treat population). This study is registered with the EU Clinical Trials Register (EudraCT 2020–001236–10).

Findings Between March 31, 2020, and Jan 4, 2021, 805 patients were screened, of whom 400 were eligible and randomly assigned to the imatinib group (n=204) or the placebo group (n=196). A total of 385 (96%) patients (median age 64 years [IQR 56–73]) received at least one dose of study medication and were included in the modified intention-to-treat population. Time to discontinuation of ventilation and supplemental oxygen for more than 48 h was not significantly different between the two groups (unadjusted hazard ratio [HR] 0·95 [95% CI 0·76–1·20]). At day 28, 15 (8%) of 197 patients had died in the imatinib group compared with 27 (14%) of 188 patients in the placebo group (unadjusted HR 0·51 [0·27–0·95]). After adjusting for baseline imbalances between the two groups (sex, obesity, diabetes, and cardiovascular disease) the HR for mortality was 0·52 (95% CI 0·26–1·05). The HR for mechanical ventilation in the imatinib group compared with the placebo group was 1·07 (0·63–1·80; p=0·81). The median duration of invasive mechanical ventilation was 7 days (IQR 3–13) in the imatinib group compared with 12 days (6–20) in the placebo group (p=0·0080). 91 (46%) of 197 patients in the imatinib group and 82 (44%) of 188 patients in the placebo group had at least one grade 3 or higher adverse event. The safety evaluation revealed no imatinib-associated adverse events.
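The crude day-28 mortality proportions can be checked against the counts in the abstract. A small Python sketch (the helper `crude_proportion` is hypothetical, written for this illustration); note that the reported HR of 0·51 comes from a time-to-event model, so it need not equal the simple ratio of these proportions (about 0·53):

```python
def crude_proportion(events: int, total: int) -> float:
    """Share of patients with the event, as a fraction between 0 and 1."""
    return events / total


# Day-28 deaths in the modified intention-to-treat population.
imatinib = crude_proportion(15, 197)
placebo = crude_proportion(27, 188)
mitt_share = crude_proportion(385, 400)  # randomised patients who received at least one dose

print(f"imatinib {imatinib:.0%}, placebo {placebo:.0%}, mITT {mitt_share:.0%}")
print(f"crude risk ratio {imatinib / placebo:.2f}")
```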

Interpretation The study failed to meet its primary outcome, as imatinib did not reduce the time to discontinuation of ventilation and supplemental oxygen for more than 48 consecutive hours in patients with COVID-19 requiring supplemental oxygen. The observed effects on survival (although attenuated after adjustment for baseline imbalances) and duration of mechanical ventilation suggest that imatinib might confer clinical benefit in hospitalised patients with COVID-19, but further studies are required to validate these findings.

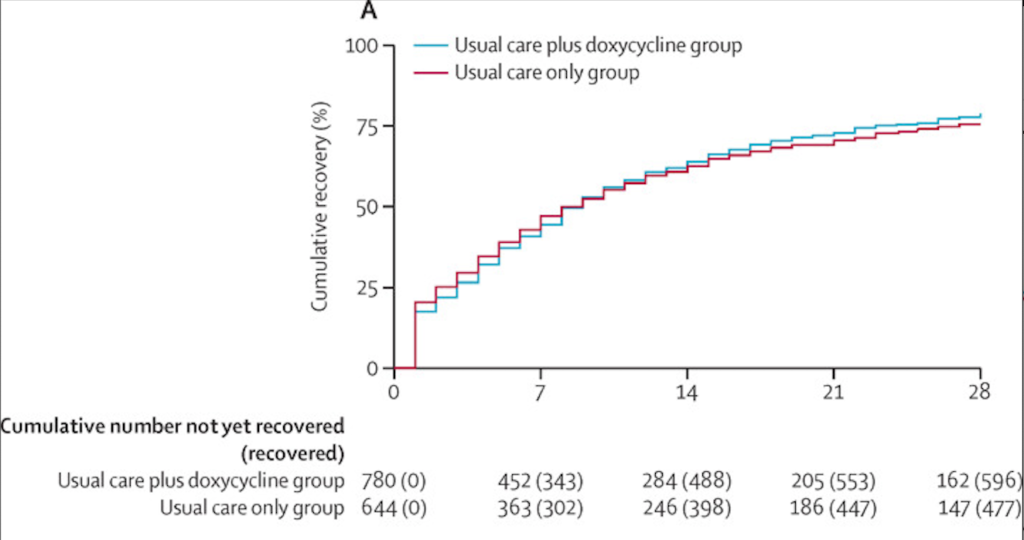

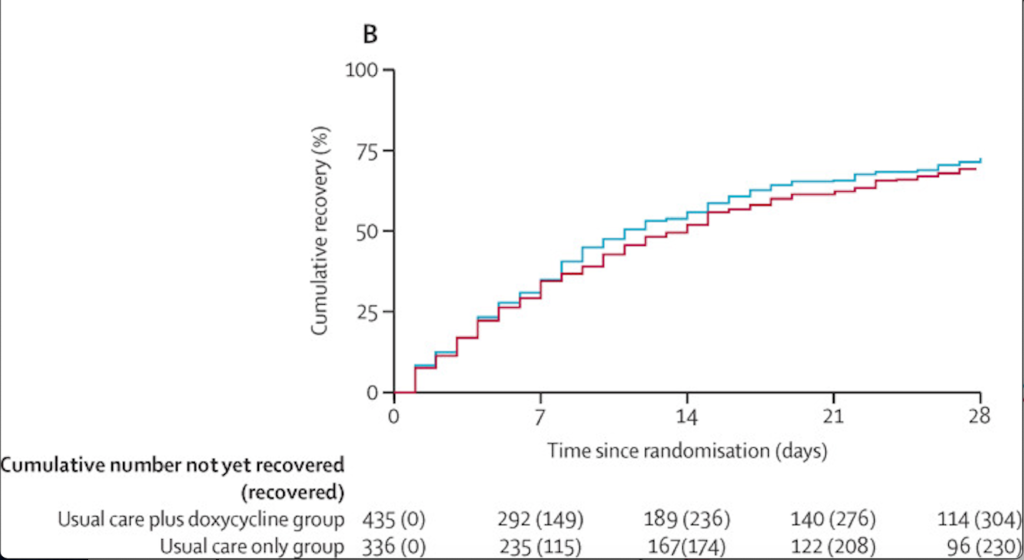

✦ Doxycycline for community treatment of suspected COVID-19 in people at high risk of adverse outcomes in the UK (PRINCIPLE): a randomised, controlled, open-label, adaptive platform trial

DOI: 10.1016/S2213-2600(21)00310-6

Randomised controlled trial of doxycycline for COVID-19 in older people treated in the community. No reduction in time to recovery, hospital admissions, or mortality.

Article abstract:

Background: Doxycycline is often used for treating COVID-19 respiratory symptoms in the community despite an absence of evidence from clinical trials to support its use. We aimed to assess the efficacy of doxycycline to treat suspected COVID-19 in the community among people at high risk of adverse outcomes.

Methods: We did a national, open-label, multi-arm, adaptive platform randomised trial of interventions against COVID-19 in older people (PRINCIPLE) across primary care centres in the UK. We included people aged 65 years or older, or 50 years or older with comorbidities (weakened immune system, heart disease, hypertension, asthma or lung disease, diabetes, mild hepatic impairment, stroke or neurological problem, and self-reported obesity or body-mass index of 35 kg/m2 or greater), who had been unwell (for ≤14 days) with suspected COVID-19 or a positive PCR test for SARS-CoV-2 infection in the community. Participants were randomly assigned using response adaptive randomisation to usual care only, usual care plus oral doxycycline (200 mg on day 1, then 100 mg once daily for the following 6 days), or usual care plus other interventions. The interventions reported in this manuscript are usual care plus doxycycline and usual care only; evaluations of other interventions in this platform trial are ongoing. The coprimary endpoints were time to first self-reported recovery, and hospitalisation or death related to COVID-19, both measured over 28 days from randomisation and analysed by intention to treat.

Findings: The trial opened on April 2, 2020. Randomisation to doxycycline began on July 24, 2020, and was stopped on Dec 14, 2020, because the prespecified futility criterion was met; 2689 participants were enrolled and randomised between these dates. Of these, 2508 (93·3%) participants contributed follow-up data and were included in the primary analysis: 780 (31·1%) in the usual care plus doxycycline group, 948 (37·8%) in the usual care only group, and 780 (31·1%) in the usual care plus other interventions group. Among the 1792 participants randomly assigned to the usual care plus doxycycline and usual care only groups, the mean age was 61·1 years (SD 7·9); 999 (55·7%) participants were female and 790 (44·1%) were male. In the primary analysis model, there was little evidence of difference in median time to first self-reported recovery between the usual care plus doxycycline group and the usual care only group (9·6 [95% Bayesian credible interval (BCI) 8·3 to 11·0] days vs 10·1 [8·7 to 11·7] days, hazard ratio 1·04 [95% BCI 0·93 to 1·17]). The estimated benefit in median time to first self-reported recovery was 0·5 days [95% BCI -0·99 to 2·04] and the probability of a clinically meaningful benefit (defined as ≥1·5 days) was 0·10. Hospitalisation or death related to COVID-19 occurred in 41 (crude percentage 5·3%) participants in the usual care plus doxycycline group and 43 (4·5%) in the usual care only group (estimated absolute percentage difference -0·5% [95% BCI -2·6 to 1·4]); there were five deaths (0·6%) in the usual care plus doxycycline group and two (0·2%) in the usual care only group.
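The crude percentages quoted above can be reproduced from the raw counts. A minimal Python sketch (the helper `pct` is our own); the abstract's -0·5% absolute difference is a Bayesian model estimate, which is why it does not match the crude gap between 5·3% and 4·5%:

```python
def pct(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`."""
    return 100 * part / whole


enrolled, analysed = 2689, 2508
print(f"analysed: {pct(analysed, enrolled):.1f}% of enrolled")
print(f"doxycycline arm: {pct(780, analysed):.1f}% of the primary analysis")
print(f"usual care arm: {pct(948, analysed):.1f}% of the primary analysis")

# Crude rate of COVID-19-related hospitalisation or death within each arm.
print(f"event rate, doxycycline: {pct(41, 780):.1f}%")
print(f"event rate, usual care: {pct(43, 948):.1f}%")
```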

Interpretation: In patients with suspected COVID-19 in the community in the UK, who were at high risk of adverse outcomes, treatment with doxycycline was not associated with clinically meaningful reductions in time to recovery or hospital admissions or deaths related to COVID-19, and should not be used as a routine treatment for COVID-19.

✦ Variations in end-of-life practices in intensive care units worldwide (Ethicus-2): a prospective observational study

DOI: 10.1016/S2213-2600(21)00261-7

Analysis of worldwide variations in end-of-life practices in intensive care (failed resuscitation, withholding of treatment, withdrawal of treatment, shortening of the dying process). Prospective multinational observational study including adult patients who died in the ICU or had a limitation of life-sustaining treatment.

Article abstract:

Background: End-of-life practices vary among intensive care units (ICUs) worldwide. Differences can result in variable use of disproportionate or non-beneficial life-sustaining interventions across diverse world regions. This study investigated global disparities in end-of-life practices.

Methods: In this prospective, multinational, observational study, consecutive adult ICU patients who died or had a limitation of life-sustaining treatment (withholding or withdrawing life-sustaining therapy and active shortening of the dying process) during a 6-month period between Sept 1, 2015, and Sept 30, 2016, were recruited from 199 ICUs in 36 countries. The primary outcome was the end-of-life practice as defined by the end-of-life categories: withholding or withdrawing life-sustaining therapy, active shortening of the dying process, or failed cardiopulmonary resuscitation (CPR). Patients with brain death were included in a separate predefined end-of-life category. Data collection included patient characteristics, diagnoses, end-of-life decisions and their timing related to admission and discharge, or death, with comparisons across different regions. Patients were studied until death or 2 months from the first limitation decision.

Findings: Of 87 951 patients admitted to ICU, 12 850 (14·6%) were included in the study population. The distribution of patients across the end-of-life categories differed significantly between regions (p<0·001). Limitation of life-sustaining treatment occurred in 10 401 patients (11·8% of 87 951 ICU admissions and 80·9% of 12 850 in the study population). The most common limitation was withholding life-sustaining treatment (5661 [44·1%]), followed by withdrawing life-sustaining treatment (4680 [36·4%]). Treatment withdrawal was more frequent in Northern Europe (1217 [52·8%] of 2305) and Australia/New Zealand (247 [45·7%] of 541) than in Latin America (33 [5·8%] of 571) and Africa (21 [13·0%] of 162). Shortening of the dying process was uncommon across all regions (60 [0·5%]). One in five patients with treatment limitations survived hospitalisation. Death due to failed CPR occurred in 1799 (14%) of the study population, and brain death occurred in 650 (5·1%). Failure of CPR occurred less frequently in Northern Europe (85 [3·7%] of 2305), Australia/New Zealand (23 [4·3%] of 541), and North America (78 [8·5%] of 918) than in Africa (106 [65·4%] of 162), Latin America (160 [28·0%] of 571), and Southern Europe (590 [22·5%] of 2622). Factors associated with treatment limitations were region, age, diagnoses (acute and chronic), and country end-of-life legislation.
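The same 10 401 limitations appear as both 11·8% and 80·9% because two different denominators are used. A short Python sketch (the helper `pct` is our own) that recomputes each figure from the counts in the abstract:

```python
def pct(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`."""
    return 100 * part / whole


icu_admissions = 87_951
study_population = 12_850
limitations = 10_401  # withholding, withdrawing, or active shortening of the dying process

print(f"study population / admissions: {pct(study_population, icu_admissions):.1f}%")
print(f"limitations / admissions: {pct(limitations, icu_admissions):.1f}%")
print(f"limitations / study population: {pct(limitations, study_population):.1f}%")
print(f"withholding / study population: {pct(5661, study_population):.1f}%")
print(f"withdrawing / study population: {pct(4680, study_population):.1f}%")
```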

Interpretation: Limitation of life-sustaining therapies is common worldwide with regional variability. Withholding treatment is more common than withdrawing treatment. Variations in type, frequency, and timing of end-of-life decisions were observed. Recognising regional differences and the reasons behind these differences might help improve end-of-life care worldwide.

✦ Efficacy and safety of suvratoxumab for prevention of Staphylococcus aureus ventilator-associated pneumonia (SAATELLITE): a multicentre, randomised, double-blind, placebo-controlled, parallel-group, phase 2 pilot trial

DOI: 10.1016/S1473-3099(20)30995-6

Monoclonal antibodies are a promising way to spare antibiotics. This trial evaluated whether suvratoxumab reduces the incidence of S aureus ventilator-associated pneumonia (VAP) in colonised patients. Multicentre, randomised, double-blind phase 2 trial. Results were not significant.

Article abstract:

Background: Staphylococcus aureus remains a common cause of ventilator-associated pneumonia, with little change in incidence over the past 15 years. We aimed to evaluate the efficacy of suvratoxumab, a monoclonal antibody targeting the α toxin, in reducing the incidence of S aureus pneumonia in patients in the intensive care unit (ICU) who are on mechanical ventilation.

Methods: We did a multicentre, randomised, double-blind, placebo-controlled, parallel-group, phase 2 pilot trial at 31 hospitals in Belgium, the Czech Republic, France, Germany, Greece, Hungary, Portugal, Spain, and Switzerland. Eligible patients were in the ICU, aged ≥18 years, were intubated and on mechanical ventilation, were positive for S aureus colonisation of the lower respiratory tract, as assessed by quantitative PCR (qPCR) analysis of endotracheal aspirate, and had not been diagnosed with new-onset pneumonia. Patients were excluded if they had confirmed or suspected acute ongoing staphylococcal disease; had received antibiotics for S aureus infection for more than 48 h within 72 h of randomisation; had a Clinical Pulmonary Infection Score of 6 or higher; had an acute physiology and chronic health evaluation II score of 25 or higher with a Glasgow coma scale (GCS) score of more than 5, or an acute physiology and chronic health evaluation II score of at least 30 with a GCS score of 5 or less; had a Sequential Organ Failure Assessment score of 9 or higher; or had active pulmonary disease that would impair the ability to diagnose pneumonia. Colonised patients were randomly assigned (1:1:1), by use of an interactive voice or web response system, to receive either a single intravenous infusion of suvratoxumab 2000 mg, suvratoxumab 5000 mg, or placebo. Randomisation was done in blocks of size four, stratified by country and by whether patients had received systemic antibiotics for S aureus infection. Patients, investigators, and study staff involved in the treatment or clinical evaluation of patients were masked to patient assignment. The primary efficacy endpoint was the incidence of S aureus pneumonia at 30 days, as determined by a masked independent endpoint adjudication committee, in all patients who received their assigned treatment (modified intention-to-treat [ITT] population). 
Primary safety endpoints were the incidence of treatment-emergent adverse events at 30 days, 90 days, and 190 days after treatment, and the incidence of treatment-emergent serious adverse events, adverse events of special interest, and new-onset chronic disease at 190 days after treatment. All primary safety endpoints were assessed in the modified ITT population.

Findings: Between Oct 10, 2014, and April 1, 2018, 767 patients were screened, of whom 213 patients with confirmed S aureus colonisation of the lower respiratory tract were randomly assigned to the suvratoxumab 2000 mg group (n=15), the suvratoxumab 5000 mg group (n=96), or the placebo group (n=102). Two patients in the placebo group did not receive treatment after randomisation because their clinical conditions changed and they no longer met the eligibility criteria for dosing. As adjudicated by the data monitoring committee at an interim analysis, the suvratoxumab 2000 mg group was discontinued on the basis of predefined pharmacokinetic criteria. At 30 days after treatment, 17 (18%) of 96 patients in the suvratoxumab 5000 mg group and 26 (26%) of 100 patients in the placebo group had developed S aureus pneumonia (relative risk reduction 31·9% [90% CI -7·5 to 56·8], p=0·17). The incidence of treatment-emergent adverse events at 30 days was similar between the suvratoxumab 5000 mg group (87 [91%]) and the placebo group (90 [90%]). The incidence of treatment-emergent serious adverse events at 30 days was also similar between the suvratoxumab 5000 mg group (36 [38%]) and the placebo group (32 [32%]). No significant difference in the incidence of treatment-emergent adverse events between the two groups at 90 days (89 [93%] in the suvratoxumab 5000 mg group vs 92 [92%] in the placebo group) and at 190 days (93 [94%] vs 93 [93%]) was observed. 40 (40%) patients in the placebo group and 50 (52%) in the suvratoxumab 5000 mg group had a serious adverse event at 190 days. In the suvratoxumab 5000 mg group, one (1%) patient reported at least one treatment-emergent serious adverse event related to treatment, two (2%) patients reported an adverse event of special interest, and two (2%) reported a new-onset chronic disease.
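The reported relative risk reduction follows directly from the 30-day pneumonia counts. A minimal Python sketch (the helper name `relative_risk_reduction` is ours), computing RRR = 1 − (risk in the treated group / risk in the placebo group):

```python
def relative_risk_reduction(events_tx: int, n_tx: int, events_ctl: int, n_ctl: int) -> float:
    """RRR = 1 - (risk in treated group / risk in control group)."""
    return 1 - (events_tx / n_tx) / (events_ctl / n_ctl)


# S aureus pneumonia at 30 days: suvratoxumab 5000 mg (17/96) vs placebo (26/100).
rrr = relative_risk_reduction(17, 96, 26, 100)
print(f"RRR: {rrr:.1%}")
```

The point estimate matches the abstract's 31·9%; the 90% CI, which crosses zero, is what makes the result non-significant.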

Interpretation: In patients in the ICU receiving mechanical ventilation with qPCR-confirmed S aureus colonisation of the lower respiratory tract, the incidence of S aureus pneumonia at 30 days was not significantly lower following treatment with 5000 mg suvratoxumab than with placebo. Despite these negative results, monoclonal antibodies remain a promising therapeutic option for reducing antibiotic consumption, one that requires further study.

✦ Effect of Low-Normal vs High-Normal Oxygenation Targets on Organ Dysfunction in Critically Ill Patients: A Randomized Clinical Trial

DOI: 10.1001/jama.2021.13011

We have been hearing for a while now about the harms of hyperoxia (pulmonary toxicity, ischaemia-reperfusion injury, systemic vasoconstriction, all of which would tend to work against good tissue oxygenation), but also about its potential benefits (systemic vasoconstriction that could be welcome in SIRS, for example, or an antibacterial effect of hyperoxia?). So, is there less organ failure with a lower oxygenation target in critical care? Previous studies on the topic are heterogeneous (lower mortality in most; non-significant in many; and more mesenteric ischaemia in the “low-normal” group in one).

In this Dutch study of critically ill patients with SIRS, a “low-normal” oxygenation target (PaO2 60-90 mmHg) vs a “high-normal” target (PaO2 105-135 mmHg) was not found to protect against organ dysfunction, as assessed by SOFARANK over the first 14 days.

IMPORTANCE Hyperoxemia may increase organ dysfunction in critically ill patients, but optimal oxygenation targets are unknown.

OBJECTIVE To determine whether a low-normal PaO2 target compared with a high-normal target reduces organ dysfunction in critically ill patients with systemic inflammatory response syndrome (SIRS).

DESIGN, SETTING, AND PARTICIPANTS Multicenter randomized clinical trial in 4 intensive care units in the Netherlands. Enrollment was from February 2015 to October 2018, with end of follow-up to January 2019, and included adult patients admitted with 2 or more SIRS criteria and expected stay of longer than 48 hours. A total of 9925 patients were screened for eligibility, of whom 574 fulfilled the enrollment criteria and were randomized.

INTERVENTIONS Target PaO2 ranges were 8 to 12 kPa (low-normal, n = 205) and 14 to 18 kPa (high-normal, n = 195). An inspired oxygen fraction greater than 0.60 was applied only when clinically indicated.
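The trial reports PaO2 targets in kPa, whereas the commentary above uses mmHg. A small Python sketch of the conversion, assuming the standard factor 1 mmHg ≈ 133.322 Pa (the constant and function names are ours):

```python
MMHG_PER_KPA = 1000 / 133.322  # 1 mmHg is about 133.322 Pa, so 1 kPa is about 7.50 mmHg


def kpa_to_mmhg(kpa: float) -> float:
    """Convert a pressure from kilopascals to millimetres of mercury."""
    return kpa * MMHG_PER_KPA


targets = {"low-normal": (8, 12), "high-normal": (14, 18)}
for label, (low, high) in targets.items():
    print(f"{label}: {kpa_to_mmhg(low):.0f}-{kpa_to_mmhg(high):.0f} mmHg")
```

This recovers the 60-90 mmHg and 105-135 mmHg ranges quoted in the commentary.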

MAIN OUTCOMES AND MEASURES Primary end point was SOFARANK, a ranked outcome of nonrespiratory organ failure quantified by the nonrespiratory components of the Sequential Organ Failure Assessment (SOFA) score, summed over the first 14 study days. Participants were ranked from fastest organ failure improvement (lowest scores) to worsening organ failure or death (highest scores). Secondary end points were duration of mechanical ventilation, in-hospital mortality, and hypoxemic measurements.

RESULTS Among the 574 patients who were randomized, 400 (70%) were enrolled within 24 hours (median age, 68 years; 140 women [35%]), all of whom completed the trial. The median PaO2 difference between the groups was −1.93 kPa (95% CI, −2.12 to −1.74; P < .001). The median SOFARANK score was −35 points in the low-normal PaO2 group vs −40 in the high-normal PaO2 group (median difference, 10 [95% CI, 0 to 21]; P = .06). There was no significant difference in median duration of mechanical ventilation (3.4 vs 3.1 days; median difference, −0.15 [95% CI, −0.88 to 0.47]; P = .59) and in-hospital mortality (32% vs 31%; odds ratio, 1.04 [95% CI, 0.67 to 1.63]; P = .91). Mild hypoxemic measurements occurred more often in the low-normal group (1.9% vs 1.2%; median difference, 0.73 [95% CI, 0.30 to 1.20]; P < .001).

Acute kidney failure developed in 20 patients (10%) in the low-normal PaO2 group and 21 patients (11%) in the high-normal PaO2 group, and acute myocardial infarction in 6 patients (2.9%) in the low-normal PaO2 group and 7 patients (3.6%) in the high-normal PaO2 group.

CONCLUSIONS AND RELEVANCE Among critically ill patients with 2 or more SIRS criteria, treatment with a low-normal PaO2 target compared with a high-normal PaO2 target did not result in a statistically significant reduction in organ dysfunction. However, the study may have had limited power to detect a smaller treatment effect than was hypothesized.

✦ Effect of Intravenous Fluid Treatment With a Balanced Solution vs 0.9% Saline Solution on Mortality in Critically Ill Patients: The BaSICS Randomized Clinical Trial

DOI: 10.1001/jama.2021.11684

The composition of resuscitation fluids: a timeless topic.

Here, a large trial with more than 11,000 randomised patients: 0.9% NaCl vs Plasmalyte, with the same rationale as ever, namely that a balanced solution, lower in chloride and closer to isotonic, probably does less damage than good old normal saline.

In this Brazilian study, the use of balanced solutions vs 0.9% NaCl in critically ill patients was not associated with lower 90-day mortality.

IMPORTANCE Intravenous fluids are used for almost all intensive care unit (ICU) patients. Clinical and laboratory studies have questioned whether specific fluid types result in improved outcomes, including mortality and acute kidney injury.

OBJECTIVE To determine the effect of a balanced solution vs saline solution (0.9% sodium chloride) on 90-day survival in critically ill patients.

DESIGN, SETTING, AND PARTICIPANTS Double-blind, factorial, randomized clinical trial conducted at 75 ICUs in Brazil. Patients who were admitted to the ICU with at least 1 risk factor for worse outcomes, who required at least 1 fluid expansion, and who were expected to remain in the ICU for more than 24 hours were randomized between May 29, 2017, and March 2, 2020; follow-up concluded on October 29, 2020. Patients were randomized to 2 different fluid types (a balanced solution vs saline solution reported in this article) and 2 different infusion rates (reported separately).

INTERVENTIONS Patients were randomly assigned 1:1 to receive either a balanced solution (n = 5522) or 0.9% saline solution (n = 5530) for all intravenous fluids.

MAIN OUTCOMES AND MEASURES The primary outcome was 90-day survival.

RESULTS Among 11 052 patients who were randomized, 10 520 (95.2%) were available for the analysis (mean age, 61.1 [SD, 17] years; 44.2% were women). There was no significant interaction between the 2 interventions (fluid type and infusion speed; P = .98). Planned surgical admissions represented 48.4% of all patients. Of all the patients, 60.6% had hypotension or vasopressor use and 44.3% required mechanical ventilation at enrollment. Patients in both groups received a median of 1.5 L of fluid during the first day after enrollment. By day 90, 1381 of 5230 patients (26.4%) assigned to a balanced solution died vs 1439 of 5290 patients (27.2%) assigned to saline solution (adjusted hazard ratio, 0.97 [95% CI, 0.90-1.05]; P = .47). There were no unexpected treatment-related severe adverse events in either group.

CONCLUSION AND RELEVANCE Among critically ill patients requiring fluid challenges, use of a balanced solution compared with 0.9% saline solution did not significantly reduce 90-day mortality. The findings do not support the use of this balanced solution.
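The raw 90-day counts above make it easy to check the crude effect sizes. The minimal sketch below computes only unadjusted risks, the risk ratio and the absolute risk difference. It does not reproduce the trial's adjusted hazard ratio (0.97), which comes from a survival model. The function name is our own.

```python
def crude_effects(events_a: int, n_a: int, events_b: int, n_b: int) -> dict:
    """Unadjusted risk per arm, risk ratio (A vs B) and risk difference (A minus B)."""
    risk_a = events_a / n_a
    risk_b = events_b / n_b
    return {
        "risk_a": risk_a,
        "risk_b": risk_b,
        "risk_ratio": risk_a / risk_b,
        "risk_difference": risk_a - risk_b,
    }

# BaSICS fluid-type comparison, deaths by day 90:
# balanced solution (A) 1381/5230 vs 0.9% saline (B) 1439/5290
result = crude_effects(1381, 5230, 1439, 5290)
print(f"Balanced: {result['risk_a']:.1%}, saline: {result['risk_b']:.1%}")
print(f"Crude risk ratio: {result['risk_ratio']:.2f}")
print(f"Crude risk difference: {result['risk_difference']:.1%}")
```

The crude risk ratio comes out very close to the adjusted hazard ratio. This is expected when randomization balances covariates and the outcome is assessed at a fixed time point.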

✦ Effect of Slower vs Faster Intravenous Fluid Bolus Rates on Mortality in Critically Ill Patients. The BaSICS Randomized Clinical Trial

DOI: 10.1001/jama.2021.11444

From the BaSICS family, we now have the little brother! The authors used the same patients as above, but this time they studied the rate at which we give our fluids rather than their composition.

Rationale: free-flow administration would improve the macrohemodynamic parameters (blood pressure and cardiac output) more quickly. However, the fluid could also leak into the tissues more quickly, with consequences for the organs, and could impair gas exchange and reduce exercise tolerance.

In this Brazilian study of critically ill patients, infusing fluid more slowly (333 mL/h vs 999 mL/h) was not associated with lower 90-day mortality.

The slower rate had a positive effect on one secondary outcome, norepinephrine use. This leads the authors to discuss a possible decrease in arterial elastance after a fluid “bolus”. Interesting!

IMPORTANCE Slower intravenous fluid infusion rates could reduce the formation of tissue oedema and organ dysfunction in critically ill patients; however, there are no data to support different infusion rates during fluid challenges for important outcomes such as mortality.

OBJECTIVE To determine the effect of a slower infusion rate vs control infusion rate on 90-day survival in patients in the intensive care unit (ICU).

DESIGN, SETTING, AND PARTICIPANTS Unblinded randomized factorial clinical trial in 75 ICUs in Brazil. A total of 11 052 patients who required at least 1 fluid challenge and had at least 1 risk factor for worse outcomes were randomized from May 29, 2017, to March 2, 2020. Follow-up was concluded on October 29, 2020. Patients were randomized to 2 different infusion rates (reported in this article) and 2 different fluid types (balanced fluids or saline, reported separately).

INTERVENTIONS Patients were randomized to receive fluid challenges at 2 different infusion rates; 5538 to the slower rate (333 mL/h) and 5514 to the control group (999 mL/h). Patients were also randomized to receive balanced solution or 0.9% saline using a factorial design.

MAIN OUTCOMES AND MEASURES The primary end point was 90-day survival.

RESULTS Of all randomized patients, 10 520 (95.2%) were analyzed (mean age, 61.1 years [SD, 17.0 years]; 44.2% were women) after excluding duplicates and consent withdrawals. Patients assigned to the slower rate received a mean of 1162 mL on the first day vs 1252 mL for the control group. By day 90, 1406 of 5276 patients (26.6%) in the slower rate group had died vs 1414 of 5244 (27.0%) in the control group (adjusted hazard ratio, 1.03; 95% CI, 0.96-1.11; P = .46). There was no significant interaction between fluid type and infusion rate (P = .98).

CONCLUSIONS AND RELEVANCE Among patients in the intensive care unit requiring fluid challenges, infusing at a slower rate compared with a faster rate did not reduce 90-day mortality. These findings do not support the use of a slower infusion rate.
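At a constant rate, infusion time is simply volume divided by rate, which shows what the two arms mean at the bedside. The sketch assumes a 500 mL fluid challenge purely for illustration. That volume is our assumption and is not taken from the trial report.

```python
def infusion_minutes(volume_ml: float, rate_ml_per_h: float) -> float:
    """Time in minutes to infuse volume_ml at a constant rate_ml_per_h."""
    return volume_ml / rate_ml_per_h * 60

# Hypothetical 500 mL fluid challenge (assumed volume, for illustration only)
for label, rate in [("slower arm", 333), ("control arm", 999)]:
    print(f"{label}: {infusion_minutes(500, rate):.0f} min at {rate} mL/h")
```

Whatever the bolus volume, the slower arm takes three times as long as the control arm.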

✦ Association of Epidural Analgesia During Labor and Delivery With Autism Spectrum Disorder in Offspring

DOI: 10.1001/jama.2021.14986

Epidural analgesia and autism: an interesting article to analyze and pick apart! In this Canadian study, delivering under epidural analgesia was associated with a higher risk of autism in the child. Whether the association holds depends on which factors you choose to include in the multivariable analysis!

Another study on the same topic, published the same month, found no significant association. Abstract available here: DOI: 10.1001/jama.2021.12655

IMPORTANCE Evidence from studies investigating the association of epidural analgesia use during labor and delivery with risk of autism spectrum disorder (ASD) in offspring is conflicting.

OBJECTIVE To assess the association of maternal use of epidural analgesia during labor and delivery with ASD in offspring using a large population-based data set with clinical data on ASD case status.

DESIGN, SETTING, AND PARTICIPANTS This population-based retrospective cohort study included term singleton children born in British Columbia, Canada, between April 1, 2000, and December 31, 2014. Stillbirths and cesarean deliveries were excluded. Clinical ASD diagnostic data were obtained from the British Columbia Autism Assessment Network and the British Columbia Ministry of Education. All children were followed up until clinical diagnosis of ASD, death, or the study end date of December 31, 2016.

EXPOSURES Use of epidural analgesia during labor and delivery.

MAIN OUTCOMES AND MEASURES A clinical diagnosis of ASD made by pediatricians, psychiatrists, and psychologists with specialty training to assess ASD. Cox proportional hazards models were used to estimate the hazard ratio of epidural analgesia use and ASD. Models were adjusted for maternal sociodemographics; maternal conditions during pregnancy; labor, delivery, and antenatal care characteristics; infant sex; gestational age; and status of small or large for gestational age. A conditional logistic regression model matching women with 2 births or more and discordance in ASD status of the offspring also was performed.

RESULTS Of the 388 254 children included in the cohort (49.8% female; mean gestational age, 39.2 [SD, 1.2] weeks; mean follow-up, 9.05 [SD, 4.3] years), 5192 were diagnosed with ASD (1.34%) and 111 480 (28.7%) were exposed to epidural analgesia. A diagnosis of ASD was made for 1710 children (1.53%) among the 111 480 deliveries exposed to epidural analgesia (94 157 women) vs a diagnosis of ASD in 3482 children (1.26%) among the 276 774 deliveries not exposed to epidural analgesia (192 510 women) (absolute risk difference, 0.28% [95% CI, 0.19%-0.36%]). The unadjusted hazard ratio was 1.32 (95% CI, 1.24-1.40) and the fully adjusted hazard ratio was 1.08 (95% CI, 1.01-1.15). There was no statistically significant association of epidural analgesia use during labor and delivery with ASD in the within-woman matched conditional logistic regression (839/1659 [50.6%] in the exposed group vs 1905/4587 [41.5%] in the unexposed group; fully adjusted odds ratio, 1.07 [95% CI, 0.87-1.30]).

CONCLUSIONS AND RELEVANCE In this population-based study, maternal epidural analgesia use during labor and delivery was associated with a small increase in the risk of autism spectrum disorder in offspring that met the threshold for statistical significance. However, given the likelihood of residual confounding that may account for the results, these findings do not provide strong supporting evidence for this association.
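The absolute risk difference reported above can be reproduced from the raw counts with a simple Wald (normal-approximation) interval. We assume the authors used this interval only because it matches the published 0.19%-0.36%; the abstract does not say. The hazard ratios come from Cox models and are not reproduced here.

```python
import math

def risk_difference_wald(events_1, n_1, events_0, n_0, z=1.96):
    """Absolute risk difference (group 1 minus group 0) with a Wald 95% CI."""
    p1 = events_1 / n_1
    p0 = events_0 / n_0
    diff = p1 - p0
    # Standard error of a difference between two independent proportions
    se = math.sqrt(p1 * (1 - p1) / n_1 + p0 * (1 - p0) / n_0)
    return diff, diff - z * se, diff + z * se

# Children with ASD: deliveries exposed (1710/111 480) vs not exposed (3482/276 774)
diff, low, high = risk_difference_wald(1710, 111_480, 3482, 276_774)
print(f"Risk difference: {diff:.2%} (95% CI, {low:.2%} to {high:.2%})")
```

With cohorts this large, even a 0.28-point difference yields a narrow interval that excludes zero. This is why the authors' caveat about residual confounding matters more than the P value.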

✦ Association of Tramadol vs Codeine Prescription Dispensation With Mortality and Other Adverse Clinical Outcomes

DOI: 10.1001/jama.2021.15255

These results surprised more than a few of us!

Is tramadol less safe than we thought compared with codeine? According to this Spanish study, it was associated with higher mortality, more cardiovascular events and more fractures. No difference was found for outcomes such as delirium and opioid abuse/dependence.

IMPORTANCE Although tramadol is increasingly used to manage chronic noncancer pain, few safety studies have compared it with other opioids.

OBJECTIVE To assess the associations of tramadol, compared with codeine, with mortality and other adverse clinical outcomes as used in outpatient settings.

DESIGN, SETTING, AND PARTICIPANTS Retrospective, population-based, propensity score–matched cohort study using a primary care database with routinely collected medical records and pharmacy dispensations covering more than 80% of the population of Catalonia, Spain (≈6 million people). Patients 18 years or older with 1 or more year of available data and dispensation of tramadol or codeine (2007-2017) were included and followed up to December 31, 2017.

EXPOSURES New prescription dispensation of tramadol or codeine (no dispensation in the previous year).

MAIN OUTCOMES AND MEASURES Outcomes studied were all-cause mortality, cardiovascular events, fractures, constipation, delirium, falls, opioid abuse/dependence, and sleep disorders within 1 year after the first dispensation. Absolute rate differences (ARDs) and hazard ratios (HRs) with 95% confidence intervals were calculated using cause-specific Cox models.

RESULTS Of the 1 093 064 patients with a tramadol or codeine dispensation during the study period (326 921 for tramadol, 762 492 for codeine, 3651 for both drugs concomitantly), a matched cohort was retained after study exclusions and propensity score matching (mean age, 53.1 [SD, 16.1] years; 57.3% women). Compared with codeine, tramadol dispensation was significantly associated with a higher risk of all-cause mortality, cardiovascular events, and fractures.

✦ Effect of Lower Tidal Volume Ventilation Facilitated by Extracorporeal Carbon Dioxide Removal vs Standard Care Ventilation on 90-Day Mortality in Patients With Acute Hypoxemic Respiratory Failure. The REST Randomized Clinical Trial

DOI: 10.1001/jama.2021.13374

Take patients with acute respiratory failure who are intubated and ventilated with a protective Vt of 6 mL/kg and a maximum plateau pressure of 30 cmH2O. Reducing volumes further would quickly lead to hypercapnia, with all its consequences. This UK trial asked whether extracorporeal CO2 removal (ECCO2R) could improve mortality and secondary outcomes such as duration of mechanical ventilation. ECCO2R allowed Vt to be halved while CO2 was cleared outside the body. Unfortunately, the trial was stopped early for futility and feasibility, the available result was not significant, and there were more intracranial hemorrhages in the ECCO2R group!

IMPORTANCE In patients who require mechanical ventilation for acute hypoxemic respiratory failure, further reduction in tidal volumes, compared with conventional low tidal volume ventilation, may improve outcomes.

OBJECTIVE To determine whether lower tidal volume mechanical ventilation using extracorporeal carbon dioxide removal improves outcomes in patients with acute hypoxemic respiratory failure.

DESIGN, SETTING, AND PARTICIPANTS This multicenter, randomized, allocation-concealed, open-label, pragmatic clinical trial enrolled 412 adult patients receiving mechanical ventilation for acute hypoxemic respiratory failure, of a planned sample size of 1120, between May 2016 and December 2019 from 51 intensive care units in the UK. Follow-up ended on March 11, 2020.

INTERVENTIONS Participants were randomized to receive lower tidal volume ventilation facilitated by extracorporeal carbon dioxide removal for at least 48 hours (n = 202) or standard care with conventional low tidal volume ventilation (n = 210).

MAIN OUTCOMES AND MEASURES The primary outcome was all-cause mortality 90 days after randomization. Prespecified secondary outcomes included ventilator-free days at day 28 and adverse event rates.

RESULTS Among 412 patients who were randomized (mean age, 59 years; 143 [35%] women), 405 (98%) completed the trial. The trial was stopped early because of futility and feasibility following recommendations from the data monitoring and ethics committee. The 90-day mortality rate was 41.5% in the lower tidal volume ventilation with extracorporeal carbon dioxide removal group vs 39.5% in the standard care group (risk ratio, 1.05 [95% CI, 0.83-1.33]; difference, 2.0% [95% CI, −7.6% to 11.5%]; P = .68). There were significantly fewer mean ventilator-free days in the extracorporeal carbon dioxide removal group compared with the standard care group (7.1 [95% CI, 5.9-8.3] vs 9.2 [95% CI, 7.9-10.4] days; mean difference, −2.1 [95% CI, −3.8 to −0.3]; P = .02). Serious adverse events were reported for 62 patients (31%) in the extracorporeal carbon dioxide removal group and 18 (9%) in the standard care group, including intracranial hemorrhage in 9 patients (4.5%) vs 0 (0%) and bleeding at other sites in 6 (3.0%) vs 1 (0.5%) in the extracorporeal carbon dioxide removal group vs the control group. Overall, 21 patients experienced 22 serious adverse events related to the study device.

CONCLUSIONS AND RELEVANCE Among patients with acute hypoxemic respiratory failure, the use of extracorporeal carbon dioxide removal to facilitate lower tidal volume mechanical ventilation, compared with conventional low tidal volume mechanical ventilation, did not significantly reduce 90-day mortality. However, due to early termination, the study may have been underpowered to detect a clinically important difference.
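The "6 mL/kg" in the commentary is conventionally expressed per kilogram of predicted body weight (PBW), which is computed from height and sex. The sketch below uses the ARDSNet PBW formula, which the abstract does not state. It takes a hypothetical 175 cm male patient to show what halving the tidal volume means in millilitres. The function names are illustrative.

```python
def predicted_body_weight(height_cm: float, male: bool) -> float:
    """ARDSNet predicted body weight in kg from height (cm) and sex."""
    base = 50.0 if male else 45.5
    return base + 0.91 * (height_cm - 152.4)

def tidal_volume_ml(height_cm: float, male: bool, ml_per_kg: float) -> float:
    """Tidal volume (mL) for a target expressed in mL/kg of predicted body weight."""
    return ml_per_kg * predicted_body_weight(height_cm, male)

# Hypothetical 175 cm man: conventional protective Vt, then half of it
for target in (6.0, 3.0):
    print(f"{target} mL/kg PBW -> {tidal_volume_ml(175, True, target):.0f} mL")
```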

✦ Effectiveness of therapeutic heparin versus prophylactic heparin on death, mechanical ventilation, or intensive care unit admission in moderately ill patients with covid-19 admitted to hospital: RAPID randomised clinical trial

DOI: 10.1136/bmj.n2400

Abstract:

Objective To evaluate the effects of therapeutic heparin compared with prophylactic heparin among moderately ill patients with covid-19 admitted to hospital wards.

Design Randomised controlled, adaptive, open label clinical trial.

Setting 28 hospitals in Brazil, Canada, Ireland, Saudi Arabia, United Arab Emirates, and US.

Participants 465 adults admitted to hospital wards with covid-19 and increased D-dimer levels were recruited between 29 May 2020 and 12 April 2021 and were randomly assigned to therapeutic dose heparin (n=228) or prophylactic dose heparin (n=237).

Interventions Therapeutic dose or prophylactic dose heparin (low molecular weight or unfractionated heparin), to be continued until hospital discharge, day 28, or death.

Main outcome measures The primary outcome was a composite of death, invasive mechanical ventilation, non-invasive mechanical ventilation, or admission to an intensive care unit, assessed up to 28 days. The secondary outcomes included all cause death, the composite of all cause death or any mechanical ventilation, and venous thromboembolism. Safety outcomes included major bleeding. Outcomes were blindly adjudicated.

Results The mean age of participants was 60 years; 264 (56.8%) were men and the mean body mass index was 30.3 kg/m2. At 28 days, the primary composite outcome had occurred in 37/228 patients (16.2%) assigned to therapeutic heparin and 52/237 (21.9%) assigned to prophylactic heparin (odds ratio 0.69, 95% confidence interval 0.43 to 1.10; P=0.12). Deaths occurred in four patients (1.8%) assigned to therapeutic heparin and 18 patients (7.6%) assigned to prophylactic heparin (0.22, 0.07 to 0.65; P=0.006). The composite of all cause death or any mechanical ventilation occurred in 23 patients (10.1%) assigned to therapeutic heparin and 38 (16.0%) assigned to prophylactic heparin (0.59, 0.34 to 1.02; P=0.06). Venous thromboembolism occurred in two patients (0.9%) assigned to therapeutic heparin and six (2.5%) assigned to prophylactic heparin (0.34, 0.07 to 1.71; P=0.19). Major bleeding occurred in two patients (0.9%) assigned to therapeutic heparin and four (1.7%) assigned to prophylactic heparin (0.52, 0.09 to 2.85; P=0.69).

Conclusions In moderately ill patients with covid-19 and increased D-dimer levels admitted to hospital wards, therapeutic heparin was not significantly associated with a reduction in the primary outcome, but the odds of death at 28 days were decreased. The risk of major bleeding appeared low in this trial.
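The primary-outcome odds ratio can be recomputed from the 2×2 counts in the Results. This sketch assumes an unadjusted odds ratio with a Woolf (log-scale) confidence interval. That choice is ours, although it reproduces the published 0.69 (0.43 to 1.10).

```python
import math

def odds_ratio_woolf(events_1, n_1, events_0, n_0, z=1.96):
    """Odds ratio (group 1 vs group 0) with a Woolf log-scale 95% CI."""
    a, b = events_1, n_1 - events_1  # group 1: events, non-events
    c, d = events_0, n_0 - events_0  # group 0: events, non-events
    log_or = math.log((a * d) / (b * c))
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)  # SE of log(OR)
    return math.exp(log_or), math.exp(log_or - z * se), math.exp(log_or + z * se)

# Primary composite outcome: therapeutic (37/228) vs prophylactic (52/237) heparin
or_, low, high = odds_ratio_woolf(37, 228, 52, 237)
print(f"Unadjusted OR {or_:.2f} (95% CI {low:.2f} to {high:.2f})")
```

The interval crosses 1, which is why the primary outcome is reported as non-significant even though the point estimate favours therapeutic heparin.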

✦ Post-discharge after surgery Virtual Care with Remote Automated Monitoring-1 (PVC-RAM-1) technology versus standard care: randomised controlled trial

DOI: 10.1136/bmj.n2209

Abstract:

Objective To determine if virtual care with remote automated monitoring (RAM) technology versus standard care increases days alive at home among adults discharged after non-elective surgery during the covid-19 pandemic.

Design Multicentre randomised controlled trial.

Setting 8 acute care hospitals in Canada.

Participants 905 adults (≥40 years) who resided in areas with mobile phone coverage and were to be discharged from hospital after non-elective surgery were randomised either to virtual care and RAM (n=451) or to standard care (n=454). 903 participants (99.8%) completed the 31 day follow-up.

Intervention Participants in the experimental group received a tablet computer and RAM technology that measured blood pressure, heart rate, respiratory rate, oxygen saturation, temperature, and body weight. For 30 days the participants took daily biophysical measurements and photographs of their wound and interacted with nurses virtually. Participants in the standard care group received post-hospital discharge management according to the centre’s usual care. Patients, healthcare providers, and data collectors were aware of patients’ group allocations. Outcome adjudicators were blinded to group allocation.

Main outcome measures The primary outcome was days alive at home during 31 days of follow-up. The 12 secondary outcomes included acute hospital care, detection and correction of drug errors, and pain at 7, 15, and 30 days after randomisation.

Results All 905 participants (mean age 63.1 years) were analysed in the groups to which they were randomised. Days alive at home during 31 days of follow-up were 29.7 in the virtual care group and 29.5 in the standard care group: relative risk 1.01 (95% confidence interval 0.99 to 1.02); absolute difference 0.2% (95% confidence interval −0.5% to 0.9%). 99 participants (22.0%) in the virtual care group and 124 (27.3%) in the standard care group required acute hospital care: relative risk 0.80 (0.64 to 1.01); absolute difference 5.3% (−0.3% to 10.9%). More participants in the virtual care group than standard care group had a drug error detected (134 (29.7%) v 25 (5.5%); absolute difference 24.2%, 19.5% to 28.9%) and a drug error corrected (absolute difference 24.4%, 19.9% to 28.9%). Fewer participants in the virtual care group than standard care group reported pain at 7, 15, and 30 days after randomisation: absolute differences 13.9% (7.4% to 20.4%), 11.9% (5.1% to 18.7%), and 9.6% (2.9% to 16.3%), respectively. Beneficial effects proved substantially larger in centres with a higher rate of care escalation.

Conclusion Virtual care with RAM shows promise in improving outcomes important to patients and to optimal health system function.

✦ Ipsilateral and Simultaneous Comparison of Responses from Acceleromyography- and Electromyography-based Neuromuscular Monitors

DOI: 10.1097/ALN.0000000000003896

Background

The paucity of easy-to-use, reliable objective neuromuscular monitors is an obstacle to universal adoption of routine neuromuscular monitoring. Electromyography (EMG) has been proposed as the optimal neuromuscular monitoring technology since it addresses several acceleromyography limitations. This clinical study compared simultaneous neuromuscular responses recorded from induction of neuromuscular block until recovery using the acceleromyography-based TOF-Watch SX and EMG-based TetraGraph.

Methods

Fifty consenting patients participated. The acceleromyography and EMG devices analyzed simultaneous contractions (acceleromyography) and muscle action potentials (EMG) from the adductor pollicis muscle by synchronization via fiber optic cable link. Bland–Altman analysis described the agreement between devices during distinct phases of neuromuscular block. The primary endpoint was agreement of acceleromyography- and EMG-derived normalized train-of-four ratios greater than or equal to 80%. Secondary endpoints were agreement in the recovery train-of-four ratio range less than 80% and agreement of baseline train-of-four ratios between the devices.

Results

Acceleromyography showed normalized train-of-four ratio greater than or equal to 80% earlier than EMG. When acceleromyography showed train-of-four ratio greater than or equal to 80% (n = 2,929), the bias was 1.3 toward acceleromyography (limits of agreement, –14.0 to 16.6). When EMG showed train-of-four ratio greater than or equal to 80% (n = 2,284), the bias was –0.5 toward EMG (–14.7 to 13.6). In the acceleromyography range train-of-four ratio less than 80% (n = 2,802), the bias was 2.1 (–16.1 to 20.2), and in the EMG range train-of-four ratio less than 80% (n = 3,447), it was 2.6 (–14.4 to 19.6). Baseline train-of-four ratios were higher and more variable with acceleromyography than with EMG.

Conclusions

Bias was lower than in previous studies. Limits of agreement were wider than expected because acceleromyography readings varied more than EMG both at baseline and during recovery. The EMG-based monitor had higher precision and greater repeatability than acceleromyography. This difference between monitors was even greater when EMG data were compared to raw (nonnormalized) acceleromyography measurements. The EMG monitor is a better indicator of adequate recovery from neuromuscular block and readiness for safe tracheal extubation than the acceleromyography monitor.
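A Bland–Altman analysis reduces to two numbers: the bias (mean of the paired differences) and the 95% limits of agreement (bias ± 1.96 SD of those differences). The sketch below uses hypothetical paired train-of-four ratios that we assume are already normalized. It treats every pair as independent. The study recorded thousands of repeated pairs per phase across 50 patients, and such data would call for the repeated-measures extension of the method instead.

```python
import statistics

def bland_altman(method_a, method_b, z=1.96):
    """Bias (mean of A - B) and limits of agreement (bias +/- z * SD of differences).

    Assumes independent pairs; repeated measurements within a patient
    need the repeated-measures variant of Bland-Altman.
    """
    if len(method_a) != len(method_b) or len(method_a) < 2:
        raise ValueError("need two equal-length series with at least 2 pairs")
    diffs = [a - b for a, b in zip(method_a, method_b)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation of the differences
    return bias, bias - z * sd, bias + z * sd

# Hypothetical normalized TOF ratios (%): acceleromyography (AMG) vs EMG
amg = [90, 85, 100, 95]
emg = [88, 86, 96, 94]
bias, low, high = bland_altman(amg, emg)
print(f"bias {bias:.2f}, limits of agreement {low:.2f} to {high:.2f}")
```

A small bias can coexist with wide limits of agreement when one device is noisier. This matches the pattern the authors report for acceleromyography.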

✦ Smart Glasses for Radial Arterial Catheterization in Pediatric Patients: A Randomized Clinical Trial

DOI: 10.1097/ALN.0000000000003914

Background

Hand–eye coordination and ergonomics are important for the success of delicate ultrasound-guided medical procedures. Smart glasses (a head-mounted display) can improve both by reducing the head movements needed to look at the ultrasound screen. The hypothesis was that smart glasses could improve the success rate of ultrasound-guided pediatric radial arterial catheterization.

Methods

This prospective, single-blinded, randomized controlled, single-center study enrolled pediatric patients (n = 116, age less than 2 yr) requiring radial artery cannulation during general anesthesia. The participants were randomized into the ultrasound screen group (control) or the smart glasses group. After inducing general anesthesia, ultrasound-guided radial artery catheterization was performed. The primary outcome was the first-attempt success rate. The secondary outcomes included the first-attempt procedure time, the overall complication rate, and operators’ ergonomic satisfaction (5-point scale).

Results

In total, 116 children were included in the analysis. The smart glasses group had a higher first-attempt success rate than the control group (87.9% [51/58] vs. 72.4% [42/58]; P = 0.036; odds ratio, 2.78; 95% CI, 1.04 to 7.4; absolute risk reduction, –15.5%; 95% CI, −29.8 to −12.8%). The smart glasses group had a shorter first-attempt procedure time (median, 33 s; interquartile range, 23 to 47 s; range, 10 to 141 s) than the control group (median, 43 s; interquartile range, 31 to 67 s; range, 17 to 248 s; P = 0.007). The overall complication rate was lower in the smart glasses group than in the control group (5.2% [3/58] vs. 29.3% [17/58]; P = 0.001; odds ratio, 0.132; 95% CI, 0.036 to 0.48; absolute risk reduction, 24.1%; 95% CI, 11.1 to 37.2%). The proportion of positive ergonomic satisfaction (4 = good or 5 = best) was higher in the smart glasses group than in the control group (65.5% [38/58] vs. 20.7% [12/58]; P<0.001; odds ratio, 7.3; 95% CI, 3.16 to 16.8; absolute risk reduction, –44.8%; 95% CI, –60.9% to –28.8%).

Conclusions

Smart glasses-assisted ultrasound-guided radial artery catheterization improved the first-attempt success rate and ergonomic satisfaction while reducing the first-attempt procedure time and overall complication rates in small pediatric patients.

✦ Neurolytic Splanchnic Nerve Block and Pain Relief, Survival, and Quality of Life in Unresectable Pancreatic Cancer: A Randomized Controlled Trial

DOI: 10.1097/ALN.0000000000003936

Background

Neurolytic splanchnic nerve block is used to manage pancreatic cancer pain. However, its impact on survival and quality of life remains controversial. The authors’ primary hypothesis was that pain relief would be better with a nerve block. Secondarily, they hypothesized that analgesic use, survival, and quality of life might be affected.

Methods

This randomized, double-blind, parallel-armed trial was conducted in five Chinese centers. Eligible patients suffering from moderate to severe pain conditions were randomly assigned to receive splanchnic nerve block with either absolute alcohol (neurolysis) or normal saline (control). The primary outcome was pain relief measured on a visual analogue scale. Opioid consumption, survival, quality of life, and adverse effects were also documented. Analgesics were managed using a protocol common to all centers. Patients were followed up for 8 months or until death.

Results

Ninety-six patients (48 for each group) were included in the analysis. Pain relief with neurolysis was greater for the first 3 months (largest at the first month; mean difference, 0.7 [95% CI, 0.3 to 1.0]; adjusted P < 0.001) compared with placebo injection. Opioid consumption with neurolysis was lower for the first 5 months (largest at the first month; mean difference, 95.8 [95% CI, 67.4 to 124.1]; adjusted P < 0.001) compared with placebo injection. There was a significant difference in survival (hazard ratio, 1.56 [95% CI, 1.03 to 2.35]; P = 0.036) between groups. A significant reduction in survival in neurolysis was found for stage IV patients (hazard ratio, 1.94 [95% CI, 1.29 to 2.93]; P = 0.001), but not for stage III patients (hazard ratio, 1.08 [95% CI, 0.59 to 1.97]; P = 0.809). No differences in quality of life were observed.

Conclusions

Neurolytic splanchnic nerve block appears to be an effective option for controlling pain and reducing opioid requirements in patients with unresectable pancreatic cancer.

✦ Perioperative Normal Saline Administration and Delayed Graft Function in Patients Undergoing Kidney Transplantation: A Retrospective Cohort Study

DOI: 10.1097/ALN.0000000000003887

Background

Perioperative normal saline administration remains common practice during kidney transplantation. The authors hypothesized that the proportion of balanced crystalloids versus normal saline administered during the perioperative period would be associated with the likelihood of delayed graft function.

Methods

The authors linked outcome data from a national transplant registry with institutional anesthesia records from 2005 to 2015. The cohort included adult living and deceased donor transplants, and recipients with or without need for dialysis before transplant. The primary exposure was the percent normal saline of the total amount of crystalloids administered perioperatively, categorized into a low (less than or equal to 30%), intermediate (greater than 30% but less than 80%), and high normal saline group (greater than or equal to 80%). The primary outcome was the incidence of delayed graft function, defined as the need for dialysis within 1 week of transplant. The authors adjusted for the following potential confounders and covariates: transplant year, total crystalloid volume, surgical duration, vasopressor infusions, and erythrocyte transfusions; recipient sex, age, body mass index, race, number of human leukocyte antigen mismatches, and dialysis vintage; and donor type, age, and sex.

Results

The authors analyzed 2,515 records. The incidence of delayed graft function in the low, intermediate, and high normal saline group was 15.8% (61/385), 17.5% (113/646), and 21% (311/1,484), respectively. The adjusted odds ratio (95% CI) for delayed graft function was 1.24 (0.85 to 1.81) for the intermediate and 1.55 (1.09 to 2.19) for the high normal saline group compared with the low normal saline group. For deceased donor transplants, delayed graft function in the low, intermediate, and high normal saline group was 24% (54/225 [reference]), 28.6% (99/346; adjusted odds ratio, 1.28 [0.85 to 1.93]), and 30.8% (277/901; adjusted odds ratio, 1.52 [1.05 to 2.21]); and for living donor transplants, 4.4% (7/160 [reference]), 4.7% (14/300; adjusted odds ratio, 1.15 [0.42 to 3.10]), and 5.8% (34/583; adjusted odds ratio, 1.66 [0.65 to 4.25]), respectively.

Conclusions

High percent normal saline administration is associated with delayed graft function in kidney transplant recipients.
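The primary exposure is a simple classification rule on the percentage of normal saline in the total perioperative crystalloid volume. The sketch encodes the thresholds stated in the Methods (≤30%, >30% to <80%, ≥80%). The function name and the volumes in the usage lines are hypothetical.

```python
def saline_group(saline_ml: float, total_crystalloid_ml: float) -> str:
    """Classify exposure by percent normal saline of total perioperative crystalloid."""
    if total_crystalloid_ml <= 0:
        raise ValueError("total crystalloid volume must be positive")
    percent = 100 * saline_ml / total_crystalloid_ml
    if percent <= 30:
        return "low"           # less than or equal to 30%
    if percent < 80:
        return "intermediate"  # greater than 30% but less than 80%
    return "high"              # greater than or equal to 80%

# Hypothetical volumes (mL) for three recipients, each given 3000 mL of crystalloid
print(saline_group(500, 3000))   # about 16.7% saline
print(saline_group(1500, 3000))  # 50% saline
print(saline_group(2400, 3000))  # 80% saline
```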

✦ Reversing Rivaroxaban Anticoagulation as Part of a Multimodal Hemostatic Intervention in a Polytrauma Animal Model

DOI: 10.1097/ALN.0000000000003899

Background

Life-threatening bleeding requires prompt reversal of the anticoagulant effects of factor Xa inhibitors. This study investigated the effectiveness of four-factor prothrombin complex concentrate in treating trauma-related hemorrhage with rivaroxaban-anticoagulation in a pig polytrauma model. This study also tested the hypothesis that adding tranexamic acid and fibrinogen concentrate to a low, subtherapeutic dose of prothrombin complex concentrate could improve its effect.

Methods

Trauma (blunt liver injury and bilateral femur fractures) was induced in 48 anesthetized male pigs after 30 min of rivaroxaban infusion (1 mg/kg). Animals in the first part of the study received prothrombin complex concentrate (12.5, 25, and 50 U/kg). In the second part, animals were treated with 12.5 U/kg prothrombin complex concentrate plus tranexamic acid or plus tranexamic acid and fibrinogen concentrate. The primary endpoint was total blood loss postinjury. The secondary endpoints (panel of coagulation parameters and thrombin generation) were monitored for 240 min posttrauma or until death.

Results

The first part of the study showed that blood loss was significantly lower in the 25 U/kg prothrombin complex concentrate (1,541 ± 269 ml) and 50 U/kg prothrombin complex concentrate (1,464 ± 108 ml) compared with control (3,313 ± 634 ml), and 12.5 U/kg prothrombin complex concentrate (2,671 ± 334 ml, all P < 0.0001). In the second part of the study, blood loss was significantly less in the 12.5 U/kg prothrombin complex concentrate plus tranexamic acid and fibrinogen concentrate (1,836 ± 556 ml, P < 0.001) compared with 12.5 U/kg prothrombin complex concentrate plus tranexamic acid (2,910 ± 856 ml), and there were no early deaths in the 25 U/kg prothrombin complex concentrate, 50 U/kg prothrombin complex concentrate, and 12.5 U/kg prothrombin complex concentrate plus tranexamic acid and fibrinogen concentrate groups. Histopathologic analyses postmortem showed no adverse events.

Conclusions

Prothrombin complex concentrate effectively reduced blood loss, restored hemostasis, and balanced thrombin generation. A multimodal hemostatic approach using tranexamic acid plus fibrinogen concentrate enhanced the effect of low doses of prothrombin complex concentrate, potentially reducing the prothrombin complex concentrate doses required for effective bleeding control.

✦ Effect of Hypotension Prediction Index-guided intraoperative haemodynamic care on depth and duration of postoperative hypotension: a sub-study of the Hypotension Prediction trial

Background

Intraoperative and postoperative hypotension are associated with morbidity and mortality. The Hypotension Prediction (HYPE) trial showed that the Hypotension Prediction Index (HPI) reduced the depth and duration of intraoperative hypotension (IOH) without excess use of intravenous fluid, vasopressor, and/or inotropic therapies. We hypothesised that intraoperative HPI-guided haemodynamic care would reduce the severity of postoperative hypotension in the post-anaesthesia care unit (PACU).

Methods

This was a sub-study of the HYPE trial, in which 60 adults undergoing elective noncardiac surgery were randomly allocated to intraoperative HPI-guided or standard haemodynamic care. Blood pressure was measured with a radial intra-arterial catheter connected to a FloTracIQ sensor. Hypotension was defined as a mean arterial pressure (MAP) <65 mm Hg, and a hypotensive event as MAP <65 mm Hg for at least 1 min. The primary outcome was the time-weighted average (TWA) of postoperative hypotension. Secondary outcomes were the absolute incidence of hypotension, the area under the threshold for hypotension, and the percentage of time spent with MAP <65 mm Hg.
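The abstract names these continuous outcomes but does not give their formulas. A common convention, assumed here rather than taken from the study, is: area under threshold (AUT) = the sum of (65 − MAP) × time over every period when MAP is below 65 mm Hg; TWA = AUT divided by total monitoring time; percentage of time = time below 65 mm Hg divided by total time. The Python sketch below applies these definitions, plus the 1-min event rule, to MAP values sampled at a fixed interval. The function names, the 30 s sampling interval, and the MAP trace are hypothetical, and the FloTracIQ system may compute these quantities differently.

```python
"""Illustrative hypotension metrics from evenly sampled MAP values (hypothetical helpers)."""

THRESHOLD_MMHG = 65.0  # hypotension threshold used in the HYPE sub-study
MIN_EVENT_S = 60       # a hypotensive event lasts at least 1 min


def hypotension_metrics(map_mmhg, dt_s, threshold=THRESHOLD_MMHG):
    """Return (area under threshold [mm Hg*min], TWA [mm Hg], % of time below threshold).

    map_mmhg: MAP samples in mm Hg, assumed to be taken every dt_s seconds.
    """
    if not map_mmhg or dt_s <= 0:
        raise ValueError("need at least one sample and a positive interval")
    total_min = len(map_mmhg) * dt_s / 60.0
    below = [m for m in map_mmhg if m < threshold]
    # Each below-threshold sample contributes (threshold - MAP) * sampling interval.
    auc_mmhg_min = sum(threshold - m for m in below) * dt_s / 60.0
    # TWA: area under threshold divided by total monitoring time.
    twa_mmhg = auc_mmhg_min / total_min
    pct_time_below = 100.0 * len(below) / len(map_mmhg)
    return auc_mmhg_min, twa_mmhg, pct_time_below


def count_hypotensive_events(map_mmhg, dt_s, threshold=THRESHOLD_MMHG,
                             min_event_s=MIN_EVENT_S):
    """Count runs of consecutive below-threshold samples lasting >= min_event_s."""
    events, run_s = 0, 0
    for m in map_mmhg:
        if m < threshold:
            run_s += dt_s
        else:
            if run_s >= min_event_s:
                events += 1
            run_s = 0
    if run_s >= min_event_s:  # a run still open at the end of the recording
        events += 1
    return events


if __name__ == "__main__":
    samples = [70, 64, 60, 62, 66, 63, 70, 70]  # hypothetical MAP trace, one value every 30 s
    auc, twa, pct = hypotension_metrics(samples, dt_s=30)
    print(f"AUT {auc} mm Hg*min, TWA {twa} mm Hg, {pct}% of time below 65 mm Hg")
    print("events:", count_hypotensive_events(samples, dt_s=30))
```

With the example trace (4 min in total), the sketch reports an AUT of 5.5 mm Hg·min, a TWA of 1.375 mm Hg, 50% of time below threshold, and one hypotensive event: the 90 s run below 65 mm Hg counts, while the isolated 30 s dip does not. Because the TWA divides by total time, it reflects both the depth and the duration of hypotension.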

Results

Overall, 54/60 (90%) subjects (age 64 (8) yr; 44% female) completed the protocol; the other 6/60 (10%) did not because the FloTracIQ device failed. Intraoperative HPI-guided care was used in 28 subjects, and 26 subjects were randomised to the control group. Postoperative hypotension occurred in 37/54 (68%) subjects. HPI-guided care did not reduce the median TWA of postoperative hypotension (adjusted median difference vs standard of care: 0.118; 95% confidence interval [CI], 0–0.332; P=0.112). HPI-guided care reduced the percentage of time with MAP <65 mm Hg by 4.9 percentage points (adjusted median difference: –4.9; 95% CI, –11.7 to –0.01; P=0.046).

Conclusions

Intraoperative HPI-guided haemodynamic care did not reduce the TWA of postoperative hypotension.

✦ Anaesthetic depth and delirium after major surgery: a randomised clinical trial

DOI: https://doi.org/10.1016/j.bja.2021.07.021

Background

Postoperative delirium is a serious complication of surgery associated with prolonged hospitalisation, long-term cognitive decline, and mortality. This study aimed to determine whether targeting bispectral index (BIS) readings of 50 (light anaesthesia) was associated with a lower incidence of postoperative delirium than targeting BIS readings of 35 (deep anaesthesia).

Methods

This multicentre randomised clinical trial enrolled 655 at-risk patients undergoing major surgery at eight centres in three countries. Delirium was assessed for 5 days postoperatively using the 3-min Confusion Assessment Method (3D-CAM) or the CAM-ICU. Cognition was screened with the Mini-Mental State Examination at baseline and discharge and with the Abbreviated Mental Test score (AMTS) at 30 days and 1 yr. Patients were randomly assigned to light (BIS target 50) or deep (BIS target 35) anaesthesia. The primary outcome was the presence of postoperative delirium on any of the first 5 postoperative days. Secondary outcomes included mortality at 1 yr, cognitive decline at discharge, cognitive impairment at 30 days and 1 yr, unplanned ICU admission, length of stay, and time in electroencephalographic burst suppression.

Results

The incidence of postoperative delirium was 19% in the BIS 50 group and 28% in the BIS 35 group (odds ratio 0.58 [95% confidence interval: 0.38–0.88]; P=0.010). At 1 yr, patients in the BIS 50 group had significantly better cognitive function than those in the BIS 35 group (AMTS ≤6 in 9% vs 20%; P<0.001).

Conclusions

Among patients undergoing major surgery, targeting light anaesthesia reduced the risk of postoperative delirium and cognitive impairment at 1 yr.

✦ Effect of positive end-expiratory pressure on lung injury and haemodynamics during experimental acute respiratory distress syndrome treated with extracorporeal membrane oxygenation and near-apnoeic ventilation

DOI: https://doi.org/10.1016/j.bja.2021.07.031

Background

Lung rest has been recommended during extracorporeal membrane oxygenation (ECMO) for severe acute respiratory distress syndrome (ARDS). Whether positive end-expiratory pressure (PEEP) confers lung protection during ECMO for severe ARDS is unclear. We compared the effects of three different PEEP levels whilst applying near-apnoeic ventilation in a model of severe ARDS treated with ECMO.

Methods

Acute respiratory distress syndrome was induced in anaesthetised adult male pigs by repeated saline lavage and injurious ventilation for 1.5 h. After ECMO was commenced, the pigs received standardised near-apnoeic ventilation for 24 h to maintain similar driving pressures and were randomly assigned to PEEP of 0, 10, or 20 cm H2O (n=7 per group). Respiratory and haemodynamic data were collected throughout the study. Histological injury was assessed by a pathologist masked to PEEP allocation. Lung oedema was estimated by wet-to-dry-weight ratio.

Results

All pigs developed severe ARDS. Oxygenation on ECMO improved with PEEP of 10 or 20 cm H2O but not with PEEP of 0 cm H2O. Haemodynamic collapse refractory to norepinephrine (n=4) and early death (n=3) occurred with PEEP of 20 cm H2O. Lung injury was least severe with PEEP of 10 cm H2O, in both dependent and non-dependent lung regions, compared with PEEP of 0 or 20 cm H2O. A higher wet-to-dry-weight ratio, indicating worse lung injury, was observed with PEEP of 0 cm H2O. Histological assessment suggested that lung injury was minimised with PEEP of 10 cm H2O.

Conclusions

During near-apnoeic ventilation and ECMO in experimental severe ARDS, 10 cm H2O PEEP minimised lung injury and improved gas exchange without compromising haemodynamic stability.

✦ Goal-directed fluid therapy in emergency abdominal surgery: a randomised multicentre trial

DOI: https://doi.org/10.1016/j.bja.2021.06.031

Background

More than 50% of patients have a major complication after emergency gastrointestinal surgery. Intravenous (i.v.) fluid therapy is a life-saving part of treatment, but evidence on which i.v. fluid strategy gives the best outcome is lacking. We hypothesised that goal-directed fluid therapy during surgery (GDT group) would reduce the risk of major complications or death in patients undergoing major emergency gastrointestinal surgery compared with standard i.v. fluid therapy (STD group).

Methods

In this randomised, assessor-blinded, two-arm, multicentre trial, we included 312 adult patients with gastrointestinal obstruction or perforation. Patients in the GDT group received i.v. fluid to near-maximal stroke volume; patients in the STD group received i.v. fluid according to best clinical practice. The postoperative target was a fluid balance of 0–2 L. The primary outcome was a composite of major complications or death within 90 days. Secondary outcomes were time in intensive care, time on a ventilator, time on dialysis, hospital stay, and minor complications.

Results

In a modified intention-to-treat analysis, we found no difference in the primary outcome between groups: 45 (30%) in the GDT group vs 39 (25%) in the STD group (odds ratio=1.24; 95% confidence interval, 0.75–2.05; P=0.40). Hospital stay was longer in the GDT group: median (inter-quartile range), 7 (4–12) vs 6 (4–8.5) days (P=0.04); no other differences were found.

Conclusion

Compared with pressure-guided i.v. fluid therapy (STD group), flow-guided fluid therapy to near-maximal stroke volume (GDT group) did not improve the outcome after surgery for bowel obstruction or gastrointestinal perforation but may have prolonged hospital stay.

✦ Delaying initiation of diuretics in critically ill patients with recent vasopressor use and high positive fluid balance

DOI: https://doi.org/10.1016/j.bja.2021.04.035

Background

Fluid overload is associated with poor outcomes. Clinicians might be reluctant to initiate diuretic therapy for patients with recent vasopressor use. We estimated the effect on 30-day mortality of withholding or delaying diuretics after vasopressor use in patients with probable fluid overload.

Methods

This was a retrospective cohort study of adults admitted to the ICUs of an academic medical centre between 2008 and 2012. Using a database of time-stamped patient records, we followed individuals from the time they first required vasopressor support and had a cumulative positive fluid balance >5 L (plus additional inclusion/exclusion criteria). We compared mortality under usual care (the mix of care actually delivered in the cohort) with mortality under treatment strategies that restricted diuretic initiation during vasopressor use and for various durations afterwards. We adjusted for baseline and time-varying confounding using inverse probability weighting.
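The weighting idea can be illustrated with a deliberately simplified Python sketch. It assumes a single treatment decision per patient and propensity scores that are already known; the study itself handled time-varying confounding over repeated decisions, which this example does not attempt. `Patient`, `weighted_risk`, `ipw_risk_difference`, and the six-patient cohort are hypothetical. Each patient is weighted by the inverse of the probability of the treatment they actually received, and 30-day mortality is then compared between the weighted arms.

```python
"""Minimal inverse probability weighting (IPW) sketch for one binary treatment decision.

Simplifying assumption: one decision per patient, with propensity scores given
rather than estimated. The cited study weighted for time-varying confounding.
"""
from typing import NamedTuple, Sequence


class Patient(NamedTuple):
    treated: int    # 1 = followed the strategy of interest, 0 = did not
    died: int       # 1 = died within 30 days, 0 = alive
    p_treat: float  # estimated P(treated = 1 | confounders), strictly between 0 and 1


def weighted_risk(patients: Sequence[Patient], arm: int) -> float:
    """Normalised (Hajek) IPW estimate of the risk had everyone been in `arm`."""
    num = den = 0.0
    for p in patients:
        if p.treated != arm:
            continue
        # Weight = 1 / probability of the treatment this patient actually received.
        prob_received = p.p_treat if arm == 1 else 1.0 - p.p_treat
        w = 1.0 / prob_received
        num += w * p.died
        den += w
    if den == 0.0:
        raise ValueError("no patients in this arm")
    return num / den


def ipw_risk_difference(patients: Sequence[Patient]) -> float:
    """Weighted risk in arm 1 minus weighted risk in arm 0 (positive = arm 1 worse)."""
    return weighted_risk(patients, 1) - weighted_risk(patients, 0)


if __name__ == "__main__":
    cohort = [  # hypothetical toy data, not from the study
        Patient(1, 1, 0.8), Patient(1, 0, 0.8), Patient(1, 1, 0.2),
        Patient(0, 0, 0.8), Patient(0, 1, 0.2), Patient(0, 0, 0.2),
    ]
    print("weighted risk, arm 1:", round(weighted_risk(cohort, 1), 3))
    print("weighted risk, arm 0:", round(weighted_risk(cohort, 0), 3))
    print("risk difference:", round(ipw_risk_difference(cohort), 3))
```

For this toy cohort the crude mortality is 2/3 in arm 1 and 1/3 in arm 0, whereas the weighted risks are about 0.83 and 0.17: patients who were unlikely to receive the treatment they actually got are up-weighted, so that each weighted arm stands in for the whole cohort. When every propensity score is equal, the weights cancel and the estimate reduces to the crude proportions.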

Results

The study included 1501 patients, and the observed 30-day mortality rate was 11%. After adjustment for observed confounders, withholding diuretics for at least 24 h after stopping the most recent vasopressor was estimated to increase the 30-day mortality rate by 2.2% (95% confidence interval [CI], 0.9–3.6%) compared with usual care. Withholding diuretic initiation only during concomitant vasopressor use was estimated to increase the mortality rate by 0.5% (95% CI, –0.2% to 1.1%), a result consistent with either moderate harm or slight benefit.

Conclusions

Withholding diuretic initiation after vasopressor use in patients with high cumulative positive balance (>5 L) was estimated to increase 30-day mortality. These findings are hypothesis generating and should be tested in a clinical trial.

✦ Effect of erector spinae plane block on the postoperative quality of recovery after laparoscopic cholecystectomy: a prospective double-blind study

DOI: https://doi.org/10.1016/j.bja.2021.06.030

Background

Laparoscopic cholecystectomy is a common surgical procedure that frequently results in substantial postoperative pain. Erector spinae plane block (ESPB) has been shown to have beneficial postoperative analgesic effects when used as part of multimodal analgesia. The aim of this study was to determine whether ESPB improves postoperative quality of recovery in patients undergoing laparoscopic cholecystectomy. The secondary objective was to evaluate the effects of ESPB on postoperative pain, opioid consumption, and nausea and vomiting.

Methods

In this prospective double-blind study, 82 patients undergoing laparoscopic cholecystectomy were randomised to one of two groups: Group N (control), which received a standard multimodal analgesic regimen, or Group E, in which an ESPB was performed. Quality of recovery was measured preoperatively and postoperatively using the 40-item quality of recovery (QoR-40) questionnaire, and postoperative pain was evaluated using numerical rating scale scores.

Results

Postoperative mean (standard deviation) QoR-40 scores were higher in Group E (181 [7.3]) than in Group N (167 [11.4]); P<0.01. Repeated-measures analysis showed significant effects of group and time on the global QoR-40 score (P<0.01), indicating better quality of recovery in Group E. Pain scores were significantly lower in Group E than in Group N, both at rest and during movement, at every time point from T1 to T8 (P<0.01 at each time). Total tramadol consumption in the first 24 h was lower in Group E (median 0 mg, inter-quartile range [IQR] 0–140) than in Group N (median 180 mg, IQR 150–240; P<0.01).

Conclusions

ESPB improved postoperative quality of recovery in patients undergoing laparoscopic cholecystectomy. Moreover, ESPB reduced pain scores and cumulative opioid consumption.

✦ The central nervous system during lung injury and mechanical ventilation: a narrative review

DOI: https://doi.org/10.1016/j.bja.2021.05.038

Mechanical ventilation induces a number of systemic responses in which the brain plays an essential role. During the last decade, substantial evidence has emerged showing that the brain modifies pulmonary responses to physical and biological stimuli by various mechanisms, including the modulation of neuroinflammatory reflexes and the onset of abnormal breathing patterns. Afferent signals and circulating factors from injured peripheral tissues, including the lung, can induce neuronal reprogramming, potentially contributing to the neurocognitive dysfunction and psychological alterations seen in critically ill patients. These impairments are ubiquitous in the presence of positive pressure ventilation. This narrative review summarises current evidence of lung–brain crosstalk in patients receiving mechanical ventilation and describes its clinical implications. It also proposes directions for future research, ranging from identifying the mechanisms of multiorgan failure to mitigating long-term sequelae after critical illness.

✦ Guidelines for the management of women with severe pre-eclampsia

DOI: 10.1016/j.accpm.2021.100901

Article summary:

Objective

To provide national guidelines for the management of women with severe pre-eclampsia.

Design

A consensus committee of 26 experts was formed. A formal conflict-of-interest (COI) policy was developed at the onset of the process and enforced throughout. The entire guidelines process was conducted independently of any industrial funding. The authors were advised to follow the principles of the Grading of Recommendations Assessment, Development and Evaluation (GRADE®) system to guide assessment of quality of evidence. The potential drawbacks of making strong recommendations in the presence of low-quality evidence were emphasised.

Methods