THE AJAR LITERATURE REVIEW: Spring 2021

Artificial intelligence is gradually finding its place in the literature. Can catheters be left in place longer without added infectious risk? Does systematic use of a stylet really reduce failed orotracheal intubations in the ICU? Continuous hypertonic saline for 48 h in traumatic brain injury? Acupuncture as an adjunct to postoperative analgesic strategies?

All the answers are in this issue, which smells of flowers and sunshine!

✦ Comparison of two delayed strategies for renal replacement therapy initiation for severe acute kidney injury (AKIKI 2): a multicentre, open-label, randomised, controlled trial

Reference: DOI: 10.1016/S0140-6736(21)00350-0

Timing of renal replacement therapy initiation for acute kidney injury in the ICU: is there any benefit in delaying it until the last possible moment? Probably not…

Article abstract:

Background Delaying renal replacement therapy (RRT) for some time in critically ill patients with severe acute kidney injury and no severe complication is safe and allows optimisation of the use of medical devices. Major uncertainty remains concerning the duration for which RRT can be postponed without risk. Our aim was to test the hypothesis that a more-delayed initiation strategy would result in more RRT-free days, compared with a delayed strategy.

Methods This was an unmasked, multicentre, prospective, open-label, randomised, controlled trial done in 39 intensive care units in France. We monitored critically ill patients with severe acute kidney injury (defined as Kidney Disease: Improving Global Outcomes stage 3) until they had oliguria for more than 72 h or a blood urea nitrogen concentration higher than 112 mg/dL. Patients were then randomly assigned (1:1) to either a strategy (delayed strategy) in which RRT was started just after randomisation or to a more-delayed strategy. With the more-delayed strategy, RRT initiation was postponed until mandatory indication (noticeable hyperkalaemia or metabolic acidosis or pulmonary oedema) or until blood urea nitrogen concentration reached 140 mg/dL. The primary outcome was the number of days alive and free of RRT between randomisation and day 28 and was done in the intention-to-treat population.

Findings Between May 7, 2018, and Oct 11, 2019, of 5336 patients assessed, 278 patients underwent randomisation; 137 were assigned to the delayed strategy and 141 to the more-delayed strategy. The number of complications potentially related to acute kidney injury or to RRT were similar between groups. The median number of RRT-free days was 12 days (IQR 0–25) in the delayed strategy and 10 days (IQR 0–24) in the more-delayed strategy (p=0·93). In a multivariable analysis, the hazard ratio for death at 60 days was 1·65 (95% CI 1·09–2·50, p=0·018) with the more-delayed versus the delayed strategy. The number of complications potentially related to acute kidney injury or renal replacement therapy did not differ between groups.

Interpretation In severe acute kidney injury patients with oliguria for more than 72 h or blood urea nitrogen concentration higher than 112 mg/dL and no severe complication that would mandate immediate RRT, longer postponing of RRT initiation did not confer additional benefit and was associated with potential harm.

✦ Azithromycin for community treatment of suspected COVID-19 in people at increased risk of an adverse clinical course in the UK (PRINCIPLE): a randomised, controlled, open-label, adaptive platform trial

Reference: DOI: 10.1016/S0140-6736(21)001

Is azithromycin of any use in the management of COVID-19? No…

Article abstract:

Background Azithromycin, an antibiotic with potential antiviral and anti-inflammatory properties, has been used to treat COVID-19, but evidence from community randomised trials is lacking. We aimed to assess the effectiveness of azithromycin to treat suspected COVID-19 among people in the community who had an increased risk of complications.

Methods In this UK-based, primary care, open-label, multi-arm, adaptive platform randomised trial of interventions against COVID-19 in people at increased risk of an adverse clinical course (PRINCIPLE), we randomly assigned people aged 65 years and older, or 50 years and older with at least one comorbidity, who had been unwell for 14 days or less with suspected COVID-19, to usual care plus azithromycin 500 mg daily for three days, usual care plus other interventions, or usual care alone. The trial had two coprimary endpoints measured within 28 days from randomisation: time to first self-reported recovery, analysed using a Bayesian piecewise exponential, and hospital admission or death related to COVID-19, analysed using a Bayesian logistic regression model. Eligible participants with outcome data were included in the primary analysis, and those who received the allocated treatment were included in the safety analysis. The trial is registered with ISRCTN, ISRCTN86534580.

Findings The first participant was recruited to PRINCIPLE on April 2, 2020. The azithromycin group enrolled participants between May 22 and Nov 30, 2020, by which time 2265 participants had been randomly assigned, 540 to azithromycin plus usual care, 875 to usual care alone, and 850 to other interventions. 2120 (94%) of 2265 participants provided follow-up data and were included in the Bayesian primary analysis, 500 participants in the azithromycin plus usual care group, 823 in the usual care alone group, and 797 in other intervention groups. 402 (80%) of 500 participants in the azithromycin plus usual care group and 631 (77%) of 823 participants in the usual care alone group reported feeling recovered within 28 days. We found little evidence of a meaningful benefit in the azithromycin plus usual care group in time to first reported recovery versus usual care alone (hazard ratio 1·08, 95% Bayesian credibility interval [BCI] 0·95 to 1·23), equating to an estimated benefit in median time to first recovery of 0·94 days (95% BCI −0·56 to 2·43). The probability that there was a clinically meaningful benefit of at least 1·5 days in time to recovery was 0·23. 16 (3%) of 500 participants in the azithromycin plus usual care group and 28 (3%) of 823 participants in the usual care alone group were hospitalised (absolute benefit in percentage 0·3%, 95% BCI −1·7 to 2·2). There were no deaths in either study group. Safety outcomes were similar in both groups. Two (1%) of 455 participants in the azithromycin plus usual care group and four (1%) of 668 participants in the usual care alone group reported admission to hospital during the trial, not related to COVID-19.

Interpretation Our findings do not justify the routine use of azithromycin for reducing time to recovery or risk of hospitalisation for people with suspected COVID-19 in the community. These findings have important antibiotic stewardship implications during this pandemic, as inappropriate use of antibiotics leads to increased antimicrobial resistance, and there is evidence that azithromycin use increased during the pandemic in the UK.

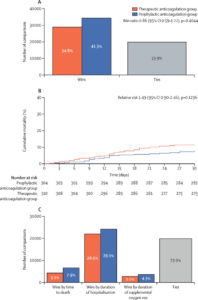

✦ Therapeutic versus prophylactic anticoagulation for patients admitted to hospital with COVID-19 and elevated D-dimer concentration (ACTION): an open-label, multicentre, randomised, controlled trial

Reference: DOI: 10.1016/S0140-6736(21)01203-4

Direct oral anticoagulants for COVID-19

Background COVID-19 is associated with a prothrombotic state leading to adverse clinical outcomes. Whether therapeutic anticoagulation improves outcomes in patients hospitalised with COVID-19 is unknown. We aimed to compare the efficacy and safety of therapeutic versus prophylactic anticoagulation in this population.

Methods We did a pragmatic, open-label (with blinded adjudication), multicentre, randomised, controlled trial, at 31 sites in Brazil. Patients (aged ≥18 years) hospitalised with COVID-19 and elevated D-dimer concentration, and who had COVID-19 symptoms for up to 14 days before randomisation, were randomly assigned (1:1) to receive either therapeutic or prophylactic anticoagulation. Therapeutic anticoagulation was in-hospital oral rivaroxaban (20 mg or 15 mg daily) for stable patients, or initial subcutaneous enoxaparin (1 mg/kg twice per day) or intravenous unfractionated heparin (to achieve a 0·3–0·7 IU/mL anti-Xa concentration) for clinically unstable patients, followed by rivaroxaban to day 30. Prophylactic anticoagulation was standard in-hospital enoxaparin or unfractionated heparin. The primary efficacy outcome was a hierarchical analysis of time to death, duration of hospitalisation, or duration of supplemental oxygen to day 30, analysed with the win ratio method (a ratio >1 reflects a better outcome in the therapeutic anticoagulation group) in the intention-to-treat population. The primary safety outcome was major or clinically relevant non-major bleeding through 30 days. This study is registered with ClinicalTrials.gov (NCT04394377) and is completed.

Findings From June 24, 2020, to Feb 26, 2021, 3331 patients were screened and 615 were randomly allocated (311 [50%] to the therapeutic anticoagulation group and 304 [50%] to the prophylactic anticoagulation group). 576 (94%) were clinically stable and 39 (6%) clinically unstable. One patient, in the therapeutic group, was lost to follow-up because of withdrawal of consent and was not included in the primary analysis. The primary efficacy outcome was not different between patients assigned therapeutic or prophylactic anticoagulation, with 28 899 (34·8%) wins in the therapeutic group and 34 288 (41·3%) in the prophylactic group (win ratio 0·86 [95% CI 0·59–1·22], p=0·40). Consistent results were seen in clinically stable and clinically unstable patients. The primary safety outcome of major or clinically relevant non-major bleeding occurred in 26 (8%) patients assigned therapeutic anticoagulation and seven (2%) assigned prophylactic anticoagulation (relative risk 3·64 [95% CI 1·61–8·27], p=0·0010). Allergic reaction to the study medication occurred in two (1%) patients in the therapeutic anticoagulation group and three (1%) in the prophylactic anticoagulation group.

Interpretation In patients hospitalised with COVID-19 and elevated D-dimer concentration, in-hospital therapeutic anticoagulation with rivaroxaban or enoxaparin followed by rivaroxaban to day 30 did not improve clinical outcomes and increased bleeding compared with prophylactic anticoagulation. Therefore, use of therapeutic-dose rivaroxaban, and other direct oral anticoagulants, should be avoided in these patients in the absence of an evidence-based indication for oral anticoagulation.
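As a rough check on the bleeding result in the Findings, the relative risk and its confidence interval can be recomputed from the reported counts. This is only a sketch: it assumes a simple log-scale (Wald) interval and denominators of 310 and 304, that is, with the patient who withdrew consent left out of the therapeutic group. The abstract does not state which method or exact denominators the authors used, and the function name `relative_risk_ci` is ours.

```python
import math

def relative_risk_ci(events_a, n_a, events_b, n_b, z=1.96):
    """Relative risk (group a vs group b) with a Wald CI on the log scale."""
    rr = (events_a / n_a) / (events_b / n_b)
    # Standard error of log(RR) for two independent binomial samples
    se_log = math.sqrt(1 / events_a - 1 / n_a + 1 / events_b - 1 / n_b)
    lower = math.exp(math.log(rr) - z * se_log)
    upper = math.exp(math.log(rr) + z * se_log)
    return rr, lower, upper

# Major or clinically relevant non-major bleeding, counts from the abstract.
# ASSUMPTION: 310 (not 311) patients in the therapeutic group.
rr, lower, upper = relative_risk_ci(26, 310, 7, 304)
print(f"RR {rr:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

Under these assumptions the estimate lands close to the published 3·64 (1·61–8·27), which suggests the reported figure is an unadjusted comparison of proportions.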

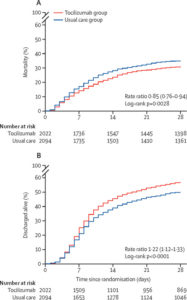

✦ Tocilizumab in patients admitted to hospital with COVID-19 (RECOVERY): a randomised, controlled, open-label, platform trial

Reference: DOI: 10.1016/S0140-6736(21)00676-0

Tocilizumab for severe COVID-19: already tested in clinical practice, and once again endorsed by this study!

Background In this study, we aimed to evaluate the effects of tocilizumab in adult patients admitted to hospital with COVID-19 with both hypoxia and systemic inflammation.

Methods This randomised, controlled, open-label, platform trial (Randomised Evaluation of COVID-19 Therapy [RECOVERY]), is assessing several possible treatments in patients hospitalised with COVID-19 in the UK. Those trial participants with hypoxia (oxygen saturation <92% on air or requiring oxygen therapy) and evidence of systemic inflammation (C-reactive protein ≥75 mg/L) were eligible for random assignment in a 1:1 ratio to usual standard of care alone versus usual standard of care plus tocilizumab at a dose of 400 mg-800 mg (depending on weight) given intravenously. A second dose could be given 12-24 h later if the patient’s condition had not improved. The primary outcome was 28-day mortality, assessed in the intention-to-treat population. The trial is registered with ISRCTN (50189673) and ClinicalTrials.gov (NCT04381936).

Findings Between April 23, 2020, and Jan 24, 2021, 4116 adults of 21 550 patients enrolled into the RECOVERY trial were included in the assessment of tocilizumab, including 3385 (82%) patients receiving systemic corticosteroids. Overall, 621 (31%) of the 2022 patients allocated tocilizumab and 729 (35%) of the 2094 patients allocated to usual care died within 28 days (rate ratio 0·85; 95% CI 0·76-0·94; p=0·0028). Consistent results were seen in all prespecified subgroups of patients, including those receiving systemic corticosteroids. Patients allocated to tocilizumab were more likely to be discharged from hospital within 28 days (57% vs 50%; rate ratio 1·22; 1·12-1·33; p<0·0001). Among those not receiving invasive mechanical ventilation at baseline, patients allocated tocilizumab were less likely to reach the composite endpoint of invasive mechanical ventilation or death (35% vs 42%; risk ratio 0·84; 95% CI 0·77-0·92; p<0·0001).

Interpretation In hospitalised COVID-19 patients with hypoxia and systemic inflammation, tocilizumab improved survival and other clinical outcomes. These benefits were seen regardless of the amount of respiratory support and were additional to the benefits of systemic corticosteroids.

✦ Effect of infusion set replacement intervals on catheter-related bloodstream infections (RSVP): a randomised, controlled, equivalence (central venous access device)–non-inferiority (peripheral arterial catheter) trial

Reference: DOI: 10.1016/S0140-6736(21)00351-2

Replacing infusion sets to prevent catheter-related infections: 4 days vs 7 days

Background The optimal duration of infusion set use to prevent life-threatening catheter-related bloodstream infection (CRBSI) is unclear. We aimed to compare the effectiveness and costs of 7-day (intervention) versus 4-day (control) infusion set replacement to prevent CRBSI in patients with central venous access devices (tunnelled cuffed, non-tunnelled, peripherally inserted, and totally implanted) and peripheral arterial catheters.

Methods We did a randomised, controlled, assessor-masked trial at ten Australian hospitals. Our hypothesis was CRBSI equivalence for central venous access devices and non-inferiority for peripheral arterial catheters (both 2% margin). Adults and children with expected greater than 24 h central venous access device-peripheral arterial catheter use were randomly assigned (1:1; stratified by hospital, catheter type, and intensive care unit or ward) by a centralised, web-based service (concealed before allocation) to infusion set replacement every 7 days, or 4 days. This included crystalloids, non-lipid parenteral nutrition, and medication infusions. Patients and clinicians were not masked, but the primary outcome (CRBSI) was adjudicated by masked infectious diseases physicians. The analysis was modified intention to treat (mITT).

Findings Between May 30, 2011, and Dec 9, 2016, from 6007 patients assessed, we assigned 2944 patients to 7-day (n=1463) or 4-day (n=1481) infusion set replacement, with 2941 in the mITT analysis. For central venous access devices, 20 (1·78%) of 1124 patients (7-day group) and 16 (1·46%) of 1097 patients (4-day group) had CRBSI (absolute risk difference [ARD] 0·32%, 95% CI -0·73 to 1·37). For peripheral arterial catheters, one (0·28%) of 357 patients in the 7-day group and none of 363 patients in the 4-day group had CRBSI (ARD 0·28%, -0·27% to 0·83%). There were no treatment-related adverse events.

Interpretation Infusion set use can be safely extended to 7 days with resultant cost and workload reductions.
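The equivalence and non-inferiority reasoning behind this conclusion can be sketched from the counts in the Findings. The snippet below assumes a plain Wald interval for a difference in proportions (the abstract does not say which method the authors used) and compares it with the 2% margin given in the Methods. The names `risk_difference_ci` and `MARGIN` are ours, for illustration only.

```python
import math

def risk_difference_ci(events_a, n_a, events_b, n_b, z=1.96):
    """Risk difference (a minus b) with a Wald CI, all expressed as proportions."""
    p_a, p_b = events_a / n_a, events_b / n_b
    diff = p_a - p_b
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    return diff, diff - z * se, diff + z * se

MARGIN = 0.02  # 2% margin stated in the Methods

# Central venous access devices: equivalence, so BOTH bounds must sit inside +/- margin
cvad = risk_difference_ci(20, 1124, 16, 1097)
cvad_equivalent = -MARGIN < cvad[1] and cvad[2] < MARGIN

# Peripheral arterial catheters: non-inferiority, so only the upper bound matters
pac = risk_difference_ci(1, 357, 0, 363)
pac_noninferior = pac[2] < MARGIN

for label, (diff, lower, upper) in (("CVAD", cvad), ("PAC", pac)):
    print(f"{label}: ARD {diff:.2%} (95% CI {lower:.2%} to {upper:.2%})")
print("CVAD equivalent:", cvad_equivalent, "| PAC non-inferior:", pac_noninferior)
```

With these inputs the intervals come out close to the published ones, and both stay within the 2% margin. Equivalence is the stricter test because it bounds the difference on both sides, whereas non-inferiority only rules out the 7-day strategy being meaningfully worse.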

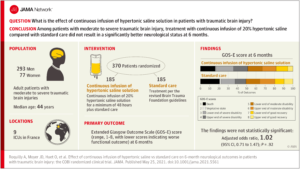

✦ Effect of Continuous Infusion of Hypertonic Saline vs Standard Care on 6-Month Neurological Outcomes in Patients With Traumatic Brain Injury: The COBI Randomized Clinical Trial

Reference: DOI: 10.1001/jama.2021.5561

No effect of hypertonic saline in patients with traumatic brain injury

Importance Fluid therapy is an important component of care for patients with traumatic brain injury, but whether it modulates clinical outcomes remains unclear.

Objective To determine whether continuous infusion of hypertonic saline solution improves neurological outcome at 6 months in patients with traumatic brain injury.

Design, Setting, and Participants Multicenter randomized clinical trial conducted in 9 intensive care units in France, including 370 patients with moderate to severe traumatic brain injury who were recruited from October 2017 to August 2019. Follow-up was completed in February 2020.

Interventions Adult patients with moderate to severe traumatic brain injury were randomly assigned to receive continuous infusion of 20% hypertonic saline solution plus standard care (n = 185) or standard care alone (controls; n = 185). The 20% hypertonic saline solution was administered for 48 hours or longer if patients remained at risk of intracranial hypertension.

Main Outcomes and Measures The primary outcome was Extended Glasgow Outcome Scale (GOS-E) score (range, 1-8, with lower scores indicating worse functional outcome) at 6 months, obtained centrally by blinded assessors and analyzed with ordinal logistic regression adjusted for prespecified prognostic factors (with a common odds ratio [OR] >1.0 favoring intervention). There were 12 secondary outcomes measured at multiple time points, including development of intracranial hypertension and 6-month mortality.

Results Among 370 patients who were randomized (median age, 44 [interquartile range, 27-59] years; 77 [20.2%] women), 359 (97%) completed the trial. The adjusted common OR for the GOS-E score at 6 months was 1.02 (95% CI, 0.71-1.47; P = .92). Of the 12 secondary outcomes, 10 were not significantly different. Intracranial hypertension developed in 62 (33.7%) patients in the intervention group and 66 (36.3%) patients in the control group (absolute difference, −2.6% [95% CI, −12.3% to 7.2%]; OR, 0.80 [95% CI, 0.51-1.26]). There was no significant difference in 6-month mortality (29 [15.9%] in the intervention group vs 37 [20.8%] in the control group; absolute difference, −4.9% [95% CI, −12.8% to 3.1%]; hazard ratio, 0.79 [95% CI, 0.48-1.28]).

Conclusions and Relevance Among patients with moderate to severe traumatic brain injury, treatment with continuous infusion of 20% hypertonic saline compared with standard care did not result in a significantly better neurological status at 6 months. However, confidence intervals for the findings were wide, and the study may have had limited power to detect a clinically important difference.

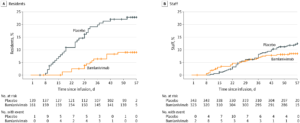

✦ Effect of Bamlanivimab vs Placebo on Incidence of COVID-19 Among Residents and Staff of Skilled Nursing and Assisted Living Facilities: A Randomized Clinical Trial

Reference: DOI: 10.1001/jama.2021.8828

Effects of anti-SARS-CoV-2 monotherapy with bamlanivimab

Importance Preventive interventions are needed to protect residents and staff of skilled nursing and assisted living facilities from COVID-19 during outbreaks in their facilities. Bamlanivimab, a neutralizing monoclonal antibody against SARS-CoV-2, may confer rapid protection from SARS-CoV-2 infection and COVID-19.

Objective To determine the effect of bamlanivimab on the incidence of COVID-19 among residents and staff of skilled nursing and assisted living facilities.

Design, Setting, and Participants Randomized, double-blind, single-dose, phase 3 trial that enrolled residents and staff of 74 skilled nursing and assisted living facilities in the United States with at least 1 confirmed SARS-CoV-2 index case. A total of 1175 participants enrolled in the study from August 2 to November 20, 2020. Database lock was triggered on January 13, 2021, when all participants reached study day 57.

Interventions Participants were randomized to receive a single intravenous infusion of bamlanivimab, 4200 mg (n = 588), or placebo (n = 587).

Main Outcomes and Measures The primary outcome was incidence of COVID-19, defined as the detection of SARS-CoV-2 by reverse transcriptase–polymerase chain reaction and mild or worse disease severity within 21 days of detection, within 8 weeks of randomization. Key secondary outcomes included incidence of moderate or worse COVID-19 severity and incidence of SARS-CoV-2 infection.

Results The prevention population comprised a total of 966 participants (666 staff and 300 residents) who were negative at baseline for SARS-CoV-2 infection and serology (mean age, 53.0 [range, 18-104] years; 722 [74.7%] women). Bamlanivimab significantly reduced the incidence of COVID-19 in the prevention population compared with placebo (8.5% vs 15.2%; odds ratio, 0.43 [95% CI, 0.28-0.68]; P < .001; absolute risk difference, −6.6 [95% CI, −10.7 to −2.6] percentage points). Five deaths attributed to COVID-19 were reported by day 57; all occurred in the placebo group. Among 1175 participants who received study product (safety population), the rate of participants with adverse events was 20.1% in the bamlanivimab group and 18.9% in the placebo group. The most common adverse events were urinary tract infection (reported by 12 participants [2%] who received bamlanivimab and 14 [2.4%] who received placebo) and hypertension (reported by 7 participants [1.2%] who received bamlanivimab and 10 [1.7%] who received placebo).

Conclusions and Relevance Among residents and staff in skilled nursing and assisted living facilities, treatment during August-November 2020 with bamlanivimab monotherapy reduced the incidence of COVID-19 infection. Further research is needed to assess preventive efficacy with current patterns of viral strains with combination monoclonal antibody therapy.

✦ Artificial intelligence–enabled electrocardiograms for identification of patients with low ejection fraction: a pragmatic, randomized clinical trial

Reference: DOI: s41591-021-01335-4

Here the authors studied the ability of AI-based ECG analysis to screen for reduced LVEF, which would lead to earlier TTE/TEE and therefore earlier treatment.

One limitation of this study is that, although AI proved effective at screening for reduced LVEF on the ECG, a readily available and accessible test, the authors did not look at whether this screening changes “hard” outcomes such as mortality or morbidity.

Article abstract:

We have conducted a pragmatic clinical trial aimed to assess whether an electrocardiogram (ECG)-based, artificial intelligence (AI)-powered clinical decision support tool enables early diagnosis of low ejection fraction (EF), a condition that is underdiagnosed but treatable. In this trial (NCT04000087), 120 primary care teams from 45 clinics or hospitals were cluster-randomized to either the intervention arm (access to AI results; 181 clinicians) or the control arm (usual care; 177 clinicians). ECGs were obtained as part of routine care from a total of 22,641 adults (N = 11,573 intervention; N = 11,068 control) without prior heart failure. The primary outcome was a new diagnosis of low EF (≤50%) within 90 days of the ECG.

The trial met the prespecified primary endpoint, demonstrating that the intervention increased the diagnosis of low EF in the overall cohort (1.6% in the control arm versus 2.1% in the intervention arm, odds ratio (OR) 1.32 (1.01–1.61), P = 0.007) and among those who were identified as having a high likelihood of low EF (that is, positive AI-ECG, 6% of the overall cohort) (14.5% in the control arm versus 19.5% in the intervention arm, OR 1.43 (1.08–1.91), P = 0.01). In the overall cohort, echocardiogram utilization was similar between the two arms (18.2% control versus 19.2% intervention, P = 0.17); for patients with positive AI-ECGs, more echocardiograms were obtained in the intervention compared to the control arm (38.1% control versus 49.6% intervention, P < 0.001).

These results indicate that use of an AI algorithm based on ECGs can enable the early diagnosis of low EF in patients in the setting of routine primary care.

100% ANAESTHESIA:

✦ Intravenous versus Volatile Anesthetic Effects on Postoperative Cognition in Elderly Patients Undergoing Laparoscopic Abdominal Surgery: A Multicenter, Randomized Trial

Reference: DOI: 10.1097/ALN.0000000000003680

Background Delayed neurocognitive recovery after surgery is associated with poor outcome. Most surgeries require general anesthesia, of which sevoflurane and propofol are the most commonly used inhalational and intravenous anesthetics. The authors tested the primary hypothesis that patients with laparoscopic abdominal surgery under propofol-based anesthesia have a lower incidence of delayed neurocognitive recovery than patients under sevoflurane-based anesthesia. A second hypothesis is that there were blood biomarkers for predicting delayed neurocognitive recovery to occur.

Methods A randomized, double-blind, parallel, controlled study was performed at four hospitals in China. Elderly patients (60 yr and older) undergoing laparoscopic abdominal surgery that was likely longer than 2 h were randomized to a propofol- or sevoflurane-based regimen to maintain general anesthesia. A minimum of 221 patients was planned for each group to detect a one-third decrease in delayed neurocognitive recovery incidence in propofol group compared with sevoflurane group. The primary outcome was delayed neurocognitive recovery incidence 5 to 7 days after surgery.

Results A total of 544 patients were enrolled, with 272 patients in each group. Of these patients, 226 in the propofol group and 221 in the sevoflurane group completed the needed neuropsychological tests for diagnosing delayed neurocognitive recovery, and 46 (20.8%) in the sevoflurane group and 38 (16.8%) in the propofol group met the criteria for delayed neurocognitive recovery (odds ratio, 0.77; 95% CI, 0.48 to 1.24; P = 0.279). A high blood interleukin-6 concentration at 1 h after skin incision was associated with an increased likelihood of delayed neurocognitive recovery (odds ratio, 1.04; 95% CI, 1.01 to 1.07; P = 0.007). Adverse event incidences were similar in both groups.

Conclusions Anesthetic choice between propofol and sevoflurane did not appear to affect the incidence of delayed neurocognitive recovery 5 to 7 days after laparoscopic abdominal surgery. A high blood interleukin-6 concentration after surgical incision may be an independent risk factor for delayed neurocognitive recovery.

✦ Restrictive Transfusion Strategy after Cardiac Surgery: Role of Central Venous Oxygen Saturation Trigger: A Randomized Controlled Trial

Reference: DOI: 10.1097/ALN.0000000000003682

Background Recent guidelines on transfusion in cardiac surgery suggest that hemoglobin might not be the only criterion to trigger transfusion. Central venous oxygen saturation (Svo2), which is related to the balance between tissue oxygen delivery and consumption, may help the decision process of transfusion. We designed a randomized study to test whether central Svo2–guided transfusion could reduce transfusion incidence after cardiac surgery.

Methods This single center, single-blinded, randomized controlled trial was conducted on adult patients after cardiac surgery in the intensive care unit (ICU) of a tertiary university hospital. Patients were screened preoperatively and were assigned randomly to two study groups (control or Svo2) if they developed anemia (hemoglobin less than 9 g/dl), without active bleeding, during their ICU stay. Patients were transfused at each anemia episode during their ICU stay except the Svo2 patients who were transfused only if the pretransfusion central Svo2 was less than or equal to 65%. The primary outcome was the proportion of patients transfused in the ICU. The main secondary endpoints were (1) number of erythrocyte units transfused in the ICU and at study discharge, and (2) the proportion of patients transfused at study discharge.

Results Among 484 screened patients, 100 were randomized, with 50 in each group. All control patients were transfused in the ICU with a total of 94 transfused erythrocyte units. In the Svo2 group, 34 (68%) patients were transfused (odds ratio, 0.031 [95% CI, 0 to 0.153]; P < 0.001 vs. controls), with a total of 65 erythrocyte units. At study discharge, eight patients of the Svo2 group remained nontransfused and the cumulative count of erythrocyte units was 96 in the Svo2 group and 126 in the control group.

Conclusions A restrictive transfusion strategy adjusted with central Svo2 may allow a significant reduction in the incidence of transfusion.

✦ Determination of the ED95 of a single bolus dose of dexmedetomidine for adequate sedation in obese or nonobese children and adolescents

Reference: DOI: 10.1016/j.bja.2020.11.037

Dosing of Dexdor (dexmedetomidine) for anaesthesia in children with and without obesity.

Article abstract:

Background With the increasing prevalence of children who are overweight and with obesity, anaesthesiologists must determine the optimal dosing of medications given the altered pharmacokinetics and pharmacodynamics in this population. We therefore determined the single dose of dexmedetomidine that provided sufficient sedation in 95% (ED95) of children with and without obesity as measured by a minimum Ramsay sedation score (RSS) of 4.

Methods Forty children with obesity (BMI >95th percentile for age and gender) and 40 children with normal weight (BMI 25th–84th percentile), aged 3–17 yr, ASA physical status 1–2, undergoing elective surgery, were recruited. The biased coin design was used to determine the target dose. Positive responses were defined as achievement of adequate sedation (RSS ≥4). The initial dose for both groups was dexmedetomidine 0.3 μg kg−1 i.v. infusion for 10 min. An increment or decrement of 0.1 μg kg−1 was used depending on the responses. Isotonic regression and bootstrapping methods were used to determine the ED95 and 95% confidence intervals (CIs), respectively.

Results The ED95 of dexmedetomidine for adequate sedation in children with obesity was 0.75 μg kg−1 with 95% CI of 0.638–0.780 μg kg−1, overlapping the CI of the ED95 estimate of 0.74 μg kg−1 (95% CI: 0.598–0.779 μg kg−1) for their normal-weight peers.

Conclusions The ED95 values of dexmedetomidine administered over 10 min were 0.75 and 0.74 μg kg−1 in paediatric subjects with and without obesity, respectively, based on total body weight.
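For readers unfamiliar with the biased coin design mentioned in the Methods, here is a minimal sketch of how the next patient's dose could be chosen. It assumes a common form of the biased coin up-and-down rule for a target above 50%: step up after an inadequate response, and after an adequate response step down only with probability (1 − target)/target. The abstract does not spell out the exact rule the authors applied, and the function `next_dose` and its parameters are hypothetical. Only the 0.3 μg kg−1 starting dose and the 0.1 μg kg−1 step come from the abstract.

```python
import random

def next_dose(current, responded, target=0.95, step=0.1, rng=random.random):
    """Dose (ug/kg) for the next patient under a biased coin up-and-down rule.

    ASSUMPTION: a standard biased coin rule for a target quantile >= 0.5;
    not confirmed as the exact rule used in the trial.
    """
    if not responded:
        # Inadequate sedation (RSS < 4): always step up
        return round(current + step, 2)
    # Adequate sedation: step down only rarely, otherwise repeat the same dose
    if rng() < (1 - target) / target:
        return round(current - step, 2)
    return current

# Walk a few patients forward from the starting dose of 0.3 ug/kg,
# using a made-up sequence of responses purely for illustration
dose = 0.3
for responded in (False, False, True, False, True):
    dose = next_dose(dose, responded)
    print(dose)
```

Because a step down is so unlikely after a success (about 5% of the time for a 95% target), the assigned doses cluster around the dose that sedates roughly 95% of patients, which is what the subsequent isotonic regression then estimates.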

✦ Multicentre randomised controlled clinical trial of electroacupuncture with usual care for patients with non-acute pain after back surgery

Reference: DOI: 10.1016/j.bja.2020.10.038

Interesting article on the value of acupuncture as an adjunct to conventional analgesic treatment after surgery!

Article abstract:

Background The purpose of this study was to investigate the effectiveness and safety between electroacupuncture (EA) combined with usual care (UC) and UC alone for pain reduction and functional improvement in patients with non-acute low back pain (LBP) after back surgery.

Methods In this multicentre, randomised, assessor-blinded active-controlled trial, 108 participants were equally randomised to either the EA with UC or the UC alone. Participants in the EA with UC group received EA treatment and UC treatment twice a week for 4 weeks; those allocated to the UC group received only UC. The primary outcome was the VAS pain intensity score. The secondary outcomes were functional improvement (Oswestry Disability Index [ODI]) and the quality of life (EuroQol-5-dimension questionnaire [EQ-5D]). The outcomes were measured at Week 5.

Results Significant reductions were observed in the VAS (mean difference [MD] –8.15; P=0.0311) and ODI scores (MD –3.98; P=0.0460) between two groups after 4 weeks of treatment. No meaningful differences were found in the EQ-5D scores and incidence of adverse events (AEs) between the groups. The reported AEs did not have a causal relationship with EA treatment.

Conclusions The results showed that EA with UC treatment was more effective than UC alone and relatively safe in patients with non-acute LBP after back surgery. EA with UC treatment may be considered as an effective, integrated, conservative treatment for patients with non-acute LBP after back surgery.

✦ Perioperative use of physostigmine to reduce opioid consumption and peri-incisional hyperalgesia: a randomised controlled trial

Reference: DOI: 10.1016/j.bja.2020.10.039

An interesting study on the potential roles of physostigmine in perioperative pain management. Apparently no benefit on postoperative opioid consumption, but a possible anti-hyperalgesic role!

Article summary:

Background Several studies have shown that cholinergic mechanisms play a pivotal role in the anti-nociceptive system by acting synergistically with morphine and reducing postoperative opioid consumption. In addition, the anti-cholinesterase drug physostigmine that increases synaptic acetylcholine concentrations has anti-inflammatory effects.

Methods In this randomised placebo-controlled trial including 110 patients undergoing nephrectomy, we evaluated the effects of intraoperative physostigmine 0.5 mg h−1 i.v. for 24 h on opioid consumption, hyperalgesia, pain scores, and satisfaction with pain control.

Results Physostigmine infusion did not affect opioid consumption compared with placebo. However, the mechanical pain threshold was significantly higher (2.3 [sd 0.3] vs 2.2 [0.4]; P=0.0491), and the distance from the suture line of hyperalgesia (5.9 [3.3] vs 8.5 [4.6]; P=0.006), wind-up ratios (2.2 [1.5] vs 3.1 [1.5]; P=0.0389), and minimum and maximum postoperative pain scores at 24 h (minimum 1.8 [1.0] vs 2.4 [1.2]; P=0.0451; and maximum 3.2 [1.4] vs 4.2 [1.4]; P=0.0081) and 48 h (minimum 0.9 [1.0] vs 1.6 [1.1]; P=0.0101; and maximum 2.0 [1.5] vs 3.2 [1.6]; P=0.0029) were lower in the study group. Pain Disability Index was lower and satisfaction with pain control was higher after 3 months in the physostigmine group.

Conclusions In contrast to previous trials, physostigmine did not reduce opioid consumption. As pain thresholds were higher and hyperalgesia and wind-up lower in the physostigmine group, we conclude that physostigmine has anti-hyperalgesic effects and attenuates sensitisation processes. Intraoperative physostigmine may be a useful and safe addition to conventional postoperative pain control.

✦ Relationship of perioperative anaphylaxis to neuromuscular blocking agents, obesity, and pholcodine consumption: a case-control study

Reference: DOI: 10.1016/j.bja.2020.12.018

Risk factors for anaphylactic reactions to neuromuscular blocking agents

Background The observation that patients presenting for bariatric surgery had a high incidence of neuromuscular blocking agent (NMBA) anaphylaxis prompted this restricted case-control study to test the hypothesis that obesity is a risk factor for NMBA anaphylaxis, independent of differences in pholcodine consumption.

Methods We compared 145 patients diagnosed with intraoperative NMBA anaphylaxis in Western Australia between 2012 and 2020 with 61 patients with cefazolin anaphylaxis with respect to BMI grade, history of pholcodine consumption, sex, age, comorbid disease, and NMBA type and dose. Confounding was assessed by stratification and binomial logistic regression.

Results Obesity (odds ratio [OR]=2.96, χ2=11.7, P=0.001), ‘definite’ pholcodine consumption (OR=14.0, χ2=2.6, P<0.001), and female sex (OR=2.70, χ2=9.61, P=0.002) were statistically significant risk factors for NMBA anaphylaxis on univariate analysis. The risk of NMBA anaphylaxis increased with BMI grade. Confounding analysis indicated that both obesity and pholcodine consumption remained important risk factors after correction for confounding, but that sex did not. The relative rate of rocuronium anaphylaxis was estimated to be 3.0 times that of vecuronium using controls as an estimate of market share, and the risk of NMBA anaphylaxis in patients presenting for bariatric surgery was 8.8 times the expected rate (74.9 vs 8.5 per 100 000 anaesthetic procedures).

Conclusions Obesity is a risk factor for NMBA anaphylaxis, the risk increasing with BMI grade. Pholcodine consumption is also a risk factor, and this is consistent with the pholcodine hypothesis. Rocuronium use is associated with an increased risk of anaphylaxis compared with vecuronium in this population.

✦ Bosutinib reduces endothelial permeability and organ failure in a rat polytrauma transfusion model

Reference: DOI: 10.1016/j.bja.2021.01.032

Value of bosutinib in the initial management of polytrauma patients. This first study, in an animal model, looks promising...

Article summary:

Background Trauma-induced shock is associated with endothelial dysfunction. We examined whether the tyrosine kinase inhibitor bosutinib as an adjunct therapy to a balanced blood component resuscitation strategy reduces trauma-induced endothelial permeability, thereby improving shock reversal and limiting transfusion requirements and organ failure in a rat polytrauma transfusion model.

Methods Male Sprague–Dawley rats (n=13 per group) were traumatised and exsanguinated until a MAP of 40 mm Hg was reached, then randomised to two groups: red blood cells, plasma and platelets in a 1:1:1 ratio with either bosutinib or vehicle. Controls were randomised to sham (median laparotomy, no trauma) with bosutinib or vehicle. Organs were harvested for histology and wet/dry (W/D) weight ratio.

Results Traumatic injury resulted in shock, with higher lactate levels compared with controls. In trauma-induced shock, the resuscitation volume needed to obtain a MAP of 60 mm Hg was lower in bosutinib-treated animals (2.8 [2.7–3.2] ml kg−1) compared with vehicle (6.1 [5.1–7.2] ml kg−1, P<0.001). Lactate levels in the bosutinib group were 2.9 [1.7–4.8] mM compared with 6.2 [3.1–14.1] mM in the vehicle group (P=0.06). Bosutinib compared with vehicle reduced lung vascular leakage (W/D ratio of 5.1 [4.6–5.3] vs 5.7 [5.4–6.0]; P=0.046) and lung injury scores (P=0.027).

Conclusions Bosutinib as an adjunct therapy to a balanced transfusion strategy reduced resuscitation volume, improved shock reversal, and reduced vascular leak and organ injury in a rat polytrauma model.

✦ Association between ionised calcium and severity of postpartum haemorrhage: a retrospective cohort study

Reference: DOI: 10.1016/j.bja.2020.11.020

Value of measuring calcium at the time of PPH diagnosis to predict which patients are most at risk of severe bleeding.

Background Postpartum haemorrhage (PPH) is often complicated by impaired coagulation. We aimed to determine whether the level of ionised calcium (Ca2+), an essential coagulation co-factor, at diagnosis of PPH is associated with bleeding severity.

Methods This was a retrospective cohort study of women diagnosed with PPH during vaginal delivery between January 2009 and April 2020. Ca2+ levels at PPH diagnosis were compared between women who progressed to severe PPH (primary outcome) and those with less severe bleeding. Severe PPH was defined by transfusion of ≥2 blood units, arterial embolisation or emergency surgery, admission to ICU, or death. Associations between other variables (e.g. fibrinogen concentration) and bleeding severity were also assessed.

Results For 436 patients included in the analysis, hypocalcaemia was more common among patients with severe PPH (51.5% vs 10.6%, P<0.001). In a multivariable logistic regression model, Ca2+ and fibrinogen were the only parameters independently associated with PPH severity with odds ratios of 1.14 for each 10 mg dl-1 decrease in fibrinogen (95% confidence interval [CI], 1.05-1.24; P=0.002) and 1.97 for each 0.1 mmol L-1 decrease in Ca2+ (95% CI, 1.25-3.1; P=0.003). The performance of Ca2+ or fibrinogen was not significantly different (area under the curve [AUC]=0.79 [95% CI, 0.75-0.83] vs AUC=0.86 [95% CI, 0.82-0.9]; P=0.09). The addition of Ca2+ to fibrinogen improved the model, leading to AUC of 0.9 (95% CI, 0.86-0.93), P=0.03.
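
As a reading aid for the odds ratios above, here is a small Python sketch showing how an odds ratio reported per fixed decrement scales to a larger decrement. It assumes the log-odds are linear in the predictor over the range considered, which is how a logistic regression coefficient is usually interpreted; the function name `scaled_odds_ratio` is illustrative and not from the paper.

```python
def scaled_odds_ratio(or_per_unit, unit, change):
    """Scale an odds ratio reported per `unit` of change to a change of size `change`.

    Under a logistic model the log-odds are additive, so odds ratios multiply:
    OR(change) = OR(unit) ** (change / unit).
    """
    return or_per_unit ** (change / unit)


# Published estimates: 1.97 per 0.1 mmol/L decrease in Ca2+,
# and 1.14 per 10 mg/dl decrease in fibrinogen.
or_calcium_02 = scaled_odds_ratio(1.97, 0.1, 0.2)      # 0.2 mmol/L lower Ca2+
or_fibrinogen_50 = scaled_odds_ratio(1.14, 10, 50)     # 50 mg/dl lower fibrinogen
print(round(or_calcium_02, 2), round(or_fibrinogen_50, 2))
```

The two predictors are reported on very different scales, so comparing the raw odds ratios (1.97 vs 1.14) says little until both are expressed for clinically comparable changes.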

Conclusions Ca2+ level at the time of diagnosis of PPH was associated with risk of severe bleeding. Ca2+ monitoring may facilitate identification and treatment of high-risk patients.

100% INTENSIVE CARE:

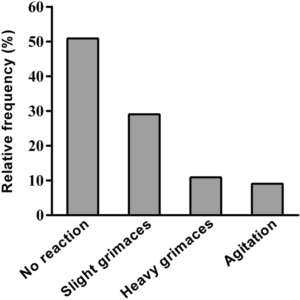

✦ Pain and dyspnea control during awake fiberoptic bronchoscopy in critically ill patients: safety and efficacy of remifentanil target-controlled infusion

Reference: DOI: 10.1186/s13613-021-00832-6

Bronchoscopy in an intubated patient is easy... but it is much more complex when the patient is awake and not intubated. Here, the authors assess the feasibility of performing bronchoscopy under conscious sedation with remifentanil target-controlled infusion (TCI).

(The primary endpoint was whether the procedure succeeded; secondary endpoints covered comfort and pain.)

That said, for anaesthetist-intensivists (who already use remifentanil TCI for various procedures under conscious sedation), this article from medical intensivists discovering remifentanil TCI is... amusing. #anesthDoncRéa

Article summary:

Purpose Flexible fiberoptic bronchoscopy is frequently used in intensive care unit, but is a source of discomfort, dyspnea and anxiety for patients. Our objective was to assess the feasibility and tolerance of a sedation using remifentanil target-controlled infusion, to perform fiberoptic bronchoscopy in awake ICU patients.

Materials, patients and methods This monocentric, prospective observational study was conducted in awake patients requiring fiberoptic bronchoscopy. In accordance with usual practices in our center, remifentanil target-controlled infusion was used under close monitoring and adapted to the patient’s reactions. The primary objective was the rate of successful procedures without additional analgesia or anesthesia. The secondary objectives were clinical tolerance and the comfort of patients (graded from “very uncomfortable” to “very comfortable”) and operators (numeric scale from 0 to 10) during the procedure.

Results From May 2014 to December 2015, 72 patients were included. Most of them (69%) were hypoxemic and admitted for acute respiratory failure. No additional medication was needed in 96% of the patients. No severe side-effects occurred. Seventy-eight percent of patients described the procedure as “comfortable or very comfortable”. Physicians rated their comfort with a median [IQR] score of 9 [8–10].

Conclusion Remifentanil target-controlled infusion administered to perform awake fiberoptic bronchoscopy in critically ill patients is feasible without requirement of additional analgesics or sedative drugs. Clinical tolerance as well as patients’ and operators’ comfort were good to excellent. This technique could benefit patients’ experience.

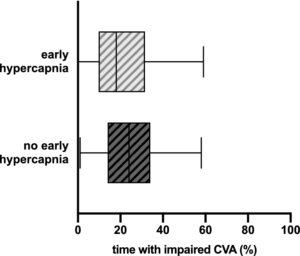

✦ Cerebrovascular autoregulation and arterial carbon dioxide in patients with acute respiratory distress syndrome: a prospective observational cohort study

Reference: DOI: 10.1186/s13613-021-00831-7

Me again, going on about CO2 levels, haha (cf. the article on ECCO2R and decarboxylation). This time it is an observational pathophysiology study on hypercapnia (and hypocapnia) and its effects on cerebral blood flow autoregulation. It is very interesting and, above all, it explains why, for example, in ARDS on ECLS the sweep gas flow is never set to maximum from the outset (whatever the FiO2 delivered to the ECLS): the aim is to avoid iatrogenic hypocapnia.

Article summary:

Background Early hypercapnia is common in patients with acute respiratory distress syndrome (ARDS) and is associated with increased mortality. Fluctuations of carbon dioxide have been associated with adverse neurological outcome in patients with severe respiratory failure requiring extracorporeal organ support. The aim of this study was to investigate whether early hypercapnia is associated with impaired cerebrovascular autoregulation during the acute phase of ARDS.

Methods Between December 2018 and November 2019, patients who fulfilled the Berlin criteria for ARDS, were enrolled. Patients with a history of central nervous system disorders, cerebrovascular disease, chronic hypercapnia, or a life expectancy of less than 24 h were excluded from study participation. During the acute phase of ARDS, cerebrovascular autoregulation was measured over two time periods for at least 60 min. Based on the values of mean arterial blood pressure and near-infrared spectroscopy, a cerebral autoregulation index (COx) was calculated. The time with impaired cerebral autoregulation was calculated for each measurement and was compared between patients with and without early hypercapnia [defined as an arterial partial pressure of carbon dioxide (PaCO2) ≥ 50 mmHg with a corresponding arterial pH < 7.35 within the first 24 h of ARDS diagnosis].

Results Of 66 patients included, 117 monitoring episodes were available. The mean age of the study population was 58.5 ± 16 years. 10 patients (15.2%) had mild, 28 (42.4%) moderate, and 28 (42.4%) severe ARDS. Nineteen patients (28.8%) required extracorporeal membrane oxygenation. Early hypercapnia was present in 39 patients (59.1%). Multivariable analysis did not show a significant association between early hypercapnia and impaired cerebrovascular autoregulation (B = 0.023 [95% CI − 0.054; 0.100], p = 0.556). Hypocapnia during the monitoring period was significantly associated with impaired cerebrovascular autoregulation [B = 0.155 (95% CI 0.014; 0.296), p = 0.032].

Early hypercapnia and cerebrovascular autoregulation. Shows the time with impaired cerebrovascular autoregulation (CVA; in % of the total monitoring time) in ARDS patients with early hypercapnia vs. no early hypercapnia. The COx was depicted as a moving linear correlation based on mean arterial pressure and cerebral oxygenation (ICM+, Cambridge Enterprise, Cambridge, UK). Impaired cerebrovascular autoregulation was defined as a COx > 0.3. ARDS Acute respiratory distress syndrome, COx cerebral oxygenation index. Data are presented as median (boxes) with Tukey whiskers
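
The COx described above can be illustrated with a short Python sketch: a moving Pearson correlation between mean arterial pressure and cerebral oxygenation, followed by the share of windows above the 0.3 threshold. This is only a sketch of the idea, not the ICM+ implementation. The window length, the sample values and the function names are hypothetical, and treating a flat signal as zero correlation is an assumption made here to keep the code simple.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0                # flat signal: treated as no correlation (assumption)
    return sxy / sqrt(sxx * syy)


def moving_cox(map_values, oxygenation, window):
    """COx for each sliding window of `window` consecutive samples."""
    return [
        pearson(map_values[i:i + window], oxygenation[i:i + window])
        for i in range(len(map_values) - window + 1)
    ]


def percent_time_impaired(cox_values, threshold=0.3):
    """Share of windows (in %) with COx above the impairment threshold."""
    impaired = sum(1 for c in cox_values if c > threshold)
    return 100.0 * impaired / len(cox_values)


# Hypothetical data: oxygenation passively follows pressure (impaired autoregulation).
map_values = [70, 75, 80, 85, 90, 95]
pressure_passive = [60, 62, 64, 66, 68, 70]
print(percent_time_impaired(moving_cox(map_values, pressure_passive, window=4)))
```

A COx near +1 means cerebral oxygenation passively tracks blood pressure, which is why values above 0.3 are read as impaired autoregulation.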

Conclusion Our results suggest that moderate permissive hypercapnia during the acute phase of ARDS has no adverse effect on cerebrovascular autoregulation and may be tolerated to a certain extent to achieve low tidal volumes. In contrast, episodes of hypocapnia may compromise cerebral blood flow regulation.

✦ Virtual reality vs. Kalinox® for management of pain in intensive care unit after cardiac surgery: a randomized study

Reference: DOI: 10.1186/s13613-021-00866-w

New pain management modalities are emerging, including the use of virtual reality (VR). This modality "distracts" the patient by placing them in a predefined virtual environment. Here, the authors ran a non-inferiority study comparing VR with MEOPA (an equimolar nitrous oxide/oxygen mixture) for the removal of surgical drains after cardiac surgery.

From my geek's point of view, the techniques used do not necessarily make the best use of VR technology, which still has room to develop.

Article summary:

Introduction The management of pain and anxiety remains a challenge in the intensive care unit. By distracting patients, virtual reality (VR) may have a role in painful procedures. We compared VR vs. an inhaled equimolar mixture of N2O and O2 (Kalinox®) for pain and anxiety management during the removal of chest drains after cardiac surgery.

Methods Prospective, non-inferiority, open-label study. Patients were randomized, for Kalinox® or VR session during drain removal. The analgesia/nociception index (ANI) was monitored during the procedure for objective assessment of pain and anxiety. The primary endpoint was the ΔANI (ANImin − ANI0) during the procedure, based on ANIm (average on 4 min). We prespecified VR as non-inferior to Kalinox® with a margin of 3 points. Self-reported pain and anxiety were also analysed using numeric rate scale (NRS).

Results 200 patients were included, 99 in the VR group and 101 in the Kalinox® group; 90 patients were analysed in both groups in per-protocol analysis. The median age was 68.0 years [60.0–74.8]. The ΔANI was − 15.1 ± 12.9 in the Kalinox® group and − 15.7 ± 11.6 in the VR group (NS). The mean difference was, therefore, − 0.6 [− 3.6 to 2.4], including the non-inferiority margin of 3. Patients in the VR group had a significantly higher pain NRS scale immediately after the drain removal, 5.0 [3.0–7.0] vs. 3.0 [2.0–6.0], p = 0.009, but no difference 10 min after. NRS of anxiety did not differ between the two groups.
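
The non-inferiority reasoning above can be checked with a few lines of Python. This sketch assumes the difference is computed as VR minus Kalinox® on the ΔANI, so that a more negative value means a larger ANI drop with VR, and that non-inferiority requires the lower bound of the confidence interval to stay above minus the margin. The function names are illustrative.

```python
def mean_difference(vr_delta_ani, kalinox_delta_ani):
    """Difference in mean delta-ANI, VR minus Kalinox (negative = larger drop with VR)."""
    return vr_delta_ani - kalinox_delta_ani


def non_inferiority_shown(ci_lower, margin):
    """True only if the whole confidence interval lies above -margin."""
    return ci_lower > -margin


# Published values: delta-ANI of -15.7 (VR) vs -15.1 (Kalinox), CI [-3.6, 2.4], margin 3.
diff = mean_difference(-15.7, -15.1)
shown = non_inferiority_shown(ci_lower=-3.6, margin=3.0)
print(round(diff, 1), shown)
```

With the published interval the check returns `False`: the lower bound (−3.6) crosses the −3 margin, so a difference larger than the prespecified margin cannot be excluded even though the point estimate is small.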

Conclusion Based on the ANI, the current study showed that VR did not reach the statistical requirements for a proven non-inferiority vs. Kalinox® in managing pain and anxiety during chest drain removal. Moreover, VR was less effective based on NRS. More studies are needed to determine if VR might have a place in the overall approach to pain and anxiety in intensive care units.

✦ Mental health and stress among ICU healthcare professionals in France according to intensity of the COVID-19 epidemic

Reference: DOI: 10.1186/s13613-021-00880-y

An interesting study quantifying mental health status and perceived stress during the first wave of COVID-19. Most interesting of all, the authors also document the sociodemographic (and other) factors associated with this deterioration in mental health and with perceived stress.

Article summary:

Background We investigated the impact of the COVID-19 crisis on mental health of professionals working in the intensive care unit (ICU) according to the intensity of the epidemic in France.

Methods This cross-sectional survey was conducted in 77 French hospitals from April 22 to May 13 2020. All ICU frontline healthcare workers were eligible. The primary endpoint was the mental health, assessed using the 12-item General Health Questionnaire. Sources of stress during the crisis were assessed using the Perceived Stressors in Intensive Care Units (PS-ICU) scale. Epidemic intensity was defined as high or low for each region based on publicly available data from Santé Publique France. Effects were assessed using linear mixed models, moderation and mediation analyses.

Results In total, 2643 health professionals participated; 64.36% in high-intensity zones. Professionals in areas with greater epidemic intensity were at higher risk of mental health issues (p < 0.001), and higher levels of overall perceived stress (p < 0.001), compared to low-intensity zones. Factors associated with higher overall perceived stress were female sex (B = 0.13; 95% confidence interval [CI] = 0.08–0.17), having a relative at risk of COVID-19 (B = 0.14; 95%-CI = 0.09–0.18) and working in high-intensity zones (B = 0.11; 95%-CI = 0.02–0.20). Perceived stress mediated the impact of the crisis context on mental health (B = 0.23, 95%-CI = 0.05, 0.41) and the impact of stress on mental health was moderated by positive thinking, b = − 0.32, 95% CI = − 0.54, − 0.11.

Conclusion COVID-19 negatively impacted the mental health of ICU professionals. Professionals working in zones where the epidemic was of high intensity were significantly more affected, with higher levels of perceived stress. This study is supported by a grant from the French Ministry of Health (PHRC-COVID 2020).

✦ Individualization of PEEP and tidal volume in ARDS patients with electrical impedance tomography: a pilot feasibility study

Reference: DOI: 10.1186/s13613-021-00877-7

In ARDS, it is preferable to tailor mechanical ventilation to each patient, mainly through a PEEP trial that balances barotrauma against gains in oxygenation. However, without direct access to the patient's "actual" ventilation, it is difficult to judge recruitability, which successive PEEP trials may fail to reveal.

Using electrical impedance tomography (EIT), a specialised technique that shows which lung regions are ventilated, overdistended or recruitable, the authors studied the feasibility of individualising PEEP based on ventilation of the lung parenchyma (rather than on pressures and oxygenation).

Article summary:

Background In mechanically ventilated patients with acute respiratory distress syndrome (ARDS), electrical impedance tomography (EIT) provides information on alveolar cycling and overdistension as well as assessment of recruitability at the bedside. We developed a protocol for individualization of positive end-expiratory pressure (PEEP) and tidal volume (VT) utilizing EIT-derived information on recruitability, overdistension and alveolar cycling. The aim of this study was to assess whether the EIT-based protocol allows individualization of ventilator settings without causing lung overdistension, and to evaluate its effects on respiratory system compliance, oxygenation and alveolar cycling.

Methods 20 patients with ARDS were included. Initially, patients were ventilated according to the recommendations of the ARDS Network with a VT of 6 ml per kg predicted body weight and PEEP adjusted according to the lower PEEP/FiO2 table. Subsequently, ventilator settings were adjusted according to the EIT-based protocol once every 30 min for a duration of 4 h. To assess global overdistension, we determined whether lung stress and strain remained below 27 mbar and 2.0, respectively.

Results Prospective optimization of mechanical ventilation with EIT led to higher PEEP levels (16.5 [14–18] mbar vs. 10 [8–10] mbar before optimization; p = 0.0001) and similar VT (5.7 ± 0.92 ml/kg vs. 5.8 ± 0.47 ml/kg before optimization; p = 0.96). Global lung stress remained below 27 mbar in all patients and global strain below 2.0 in 19 out of 20 patients. Compliance remained similar, while oxygenation was significantly improved and alveolar cycling was reduced after EIT-based optimization.

Conclusions Adjustment of PEEP and VT using the EIT-based protocol led to individualization of ventilator settings with improved oxygenation and reduced alveolar cycling without promoting global overdistension.

✦ Effect of the use of an endotracheal tube and stylet versus an endotracheal tube alone on first-attempt intubation success: a multicentre, randomised clinical trial in 999 patients

Reference: DOI: 10.1007/s00134-021-06417-y

Use of a stylet from the first attempt at orotracheal intubation

Purpose The effect of the routine use of a stylet during tracheal intubation on first-attempt intubation success is unclear. We hypothesised that the first-attempt intubation success rate would be higher with tracheal tube + stylet than with tracheal tube alone.

Methods In this multicentre randomised controlled trial, conducted in 32 intensive care units, we randomly assigned patients to tracheal tube + stylet or tracheal tube alone (i.e. without stylet). The primary outcome was the proportion of patients with first-attempt intubation success. The secondary outcome was the proportion of patients with complications related to tracheal intubation. Serious adverse events, i.e., traumatic injuries related to tracheal intubation, were evaluated.

Results A total of 999 patients were included in the modified intention-to-treat analysis: 501 (50%) to tracheal tube + stylet and 498 (50%) to tracheal tube alone. First-attempt intubation success occurred in 392 patients (78.2%) in the tracheal tube + stylet group and in 356 (71.5%) in the tracheal tube alone group (absolute risk difference, 6.7; 95%CI 1.4–12.1; relative risk, 1.10; 95%CI 1.02–1.18; P = 0.01). A total of 194 patients (38.7%) in the tracheal tube + stylet group had complications related to tracheal intubation, as compared with 200 patients (40.2%) in the tracheal tube alone group (absolute risk difference, − 1.5; 95%CI − 7.5 to 4.6; relative risk, 0.96; 95%CI 0.83–1.12; P = 0.64). The incidence of serious adverse events was 4.0% and 3.6%, respectively (absolute risk difference, 0.4; 95%CI, − 2.0 to 2.8; relative risk, 1.10; 95%CI 0.59–2.06; P = 0.76).
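
The primary-outcome figures can be recomputed from the raw counts with a short Python sketch. It uses a crude (unadjusted) risk difference with a Wald 95% confidence interval and a crude relative risk. This is an assumption about method, not the trial's actual analysis, so the crude values may differ slightly from the published ones. The function names are illustrative.

```python
from math import sqrt


def risk_difference_ci(events_a, n_a, events_b, n_b, z=1.96):
    """Crude risk difference (A minus B) with a Wald confidence interval."""
    p_a = events_a / n_a
    p_b = events_b / n_b
    diff = p_a - p_b
    se = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    return diff, diff - z * se, diff + z * se


def relative_risk(events_a, n_a, events_b, n_b):
    """Crude relative risk of the event in group A versus group B."""
    return (events_a / n_a) / (events_b / n_b)


# First-attempt success: 392/501 with stylet vs 356/498 without.
diff, low, high = risk_difference_ci(392, 501, 356, 498)
rr = relative_risk(392, 501, 356, 498)
print(f"difference {100 * diff:.1f} points, 95% CI {100 * low:.1f} to {100 * high:.1f}, RR {rr:.2f}")
```

The interval excludes zero, which matches the abstract's conclusion that routine stylet use improves first-attempt success; the same two functions can be applied to the complication counts (194/501 vs 200/498).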

Conclusions Among critically ill adults undergoing tracheal intubation, using a stylet improves first-attempt intubation success.

✦ High Pleural Pressure Prevents Alveolar Overdistension and Hemodynamic Collapse in Acute Respiratory Distress Syndrome with Class III Obesity. A Clinical Trial

Reference: DOI: 10.1164/rccm.201909-1687OC

Article summary:

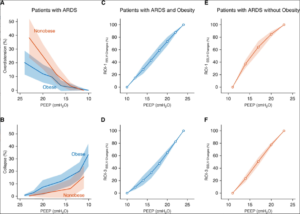

Rationale Obesity is characterized by elevated pleural pressure (Ppl) and worsening atelectasis during mechanical ventilation in patients with acute respiratory distress syndrome (ARDS).

Objectives To determine the effects of a lung recruitment maneuver (LRM) in the presence of elevated Ppl on hemodynamics, left and right ventricular pressure, and pulmonary vascular resistance. We hypothesized that elevated Ppl protects the cardiovascular system against high airway pressure and prevents lung overdistension.

Methods First, an interventional crossover trial in adult subjects with ARDS and a body mass index ≥ 35 kg/m2 (n = 21) was performed to explore the hemodynamic consequences of the LRM. Second, cardiovascular function was studied during low and high positive end-expiratory pressure (PEEP) in a model of swine with ARDS and high Ppl (n = 9) versus healthy swine with normal Ppl (n = 6).

Measurements and Main Results Subjects with ARDS and obesity (body mass index = 57 ± 12 kg/m2) after LRM required an increase in PEEP of 8 (95% confidence interval [95% CI], 7–10) cm H2O above traditional ARDS Network settings to improve lung function, oxygenation and V/Q matching, without impairment of hemodynamics or right heart function. ARDS swine with high Ppl demonstrated unchanged transmural left ventricular pressure and systemic blood pressure after the LRM protocol. Pulmonary arterial hypertension decreased (8 [95% CI, 13–4] mm Hg), as did vascular resistance (1.5 [95% CI, 2.2–0.9] Wood units) and transmural right ventricular pressure (10 [95% CI, 15–6] mm Hg) during exhalation. LRM and PEEP decreased pulmonary vascular resistance and normalized the V/Q ratio.

Clinical study of patients with acute respiratory distress syndrome (ARDS) and class III obesity versus patients with ARDS without obesity. Overdistension and collapse during a similar sequence of positive end-expiratory pressure (PEEP) and regional pressure–volume (P–V) curves for the most nondependent and the most dependent regions of interest (ROIs) are shown. (A) Overdistension and (B) collapse measured by using electrical impedance tomography in patients with ARDS and class III obesity versus patients with ARDS without obesity are shown. A mixed linear model was used for overdistension (P = 0.002 for interaction) and collapse (P < 0.001 for interaction), and for similar PEEP, overdistension was higher in patients with ARDS and without obesity and collapse was higher in patients with ARDS and class III obesity. Regional P–V curves were built for the most non–gravity-dependent ROI (ROI-1) and the most dependent ROI (ROI-3) (see Figure E1). The regional variations in EELV were calculated by using electrical impedance tomography for each PEEP (see online supplement). ROI-1 are shown in (C) patients with ARDS and class III obesity and in (E) patients with ARDS without obesity. Of note, for similar PEEP values, the P–V curve shape was different: in patients with ARDS and class III obesity, it was linear, and in patients with ARDS and without obesity, it showed positive exponential growth (mixed linear model, P = 0.002 for interaction). ROI-3 are shown in (D) patients with ARDS and class III obesity and in (F) patients with ARDS without obesity. Again, for similar PEEPs, P–V curve shapes were different. In patient with ARDS and class III obesity, the curve showed exponential negative decay, whereas in patients with ARDS without obesity, it was linear (mixed linear model, P = 0.001 for interaction). Data are presented as the mean ± SD (confidence interval). EELV = end-expiratory lung volume.

Conclusions High airway pressure is required to recruit lung atelectasis in patients with ARDS and class III obesity but causes minimal overdistension. In addition, patients with ARDS and class III obesity hemodynamically tolerate LRM with high airway pressure.

✦ Inflammation and Coagulation during Critical Illness and Long-Term Cognitive Impairment and Disability

Reference: DOI: 10.1164/rccm.201912-2449OC

Article summary:

Rationale The biological mechanisms of long-term cognitive impairment and disability after critical illness are unclear.

Objectives To test the hypothesis that markers of acute inflammation and coagulation are associated with subsequent long-term cognitive impairment and disability.

Methods We obtained plasma samples from adults with respiratory failure or shock on Study Days 1, 3, and 5 and measured concentrations of CRP (C-reactive protein), IFN-γ, IL-1β, IL-6, IL-8, IL-10, IL-12, MMP-9 (matrix metalloproteinase-9), TNF-α (tumor necrosis factor-α), soluble TNF receptor 1, and protein C. At 3 and 12 months after discharge, we assessed global cognition, executive function, and activities of daily living. We analyzed associations between markers and outcomes using multivariable regression, adjusting for age, sex, education, comorbidities, baseline cognition, doses of sedatives and opioids, stroke risk (in cognitive models), and baseline disability scores (in disability models).

Measurements and Main Results We included 548 participants who were a median (interquartile range) of 62 (53–72) years old, 88% of whom were mechanically ventilated, and who had an enrollment Sequential Organ Failure Assessment score of 9 (7–11). After adjusting for covariates, no markers were associated with long-term cognitive function. Two markers, CRP and MMP-9, were associated with greater disability in basic and instrumental activities of daily living at 3 and 12 months. No other markers were consistently associated with disability outcomes.

Conclusions Markers of systemic inflammation and coagulation measured early during critical illness are not associated with long-term cognitive outcomes and demonstrate inconsistent associations with disability outcomes. Future studies that pair longitudinal measurement of inflammation and related pathways throughout the course of critical illness and during recovery with long-term outcomes are needed.

✦ Role of Positive End-Expiratory Pressure and Regional Transpulmonary Pressure in Asymmetrical Lung Injury

Reference: https://doi.org/10.1164/rccm.202005-1556OC

Rationale Asymmetrical lung injury is a frequent clinical presentation. Regional distribution of Vt and positive end-expiratory pressure (PEEP) could result in hyperinflation of the less-injured lung. The validity of esophageal pressure (Pes) is unknown.

Objectives To compare, in asymmetrical lung injury, Pes with directly measured pleural pressures (Ppl) of both sides and investigate how PEEP impacts ventilation distribution and the regional driving transpulmonary pressure (inspiratory – expiratory).

Methods Fourteen mechanically ventilated pigs with lung injury were studied. One lung was blocked while the contralateral one underwent surfactant lavage and injurious ventilation. Airway pressure and Pes were measured, as was Ppl in the dorsal and ventral pleural space adjacent to each lung. Distribution of ventilation was assessed by electrical impedance tomography. PEEP was studied through decremental steps.

Measurements and Results Ventral and dorsal Ppl were similar between the injured and the noninjured lung across all PEEP levels. Dorsal Ppl and Pes were similar. The driving transpulmonary pressure was similar in the two lungs. Vt distribution between lungs was different at zero end-expiratory pressure (≈70% of Vt going in noninjured lung) owing to different respiratory system compliance (8.3 ml/cm H2O noninjured lung vs. 3.7 ml/cm H2O injured lung). PEEP at 10 cm H2O with transpulmonary pressure around zero homogenized Vt distribution opening the lungs. PEEP ≥16 cm H2O equalized distribution of Vt but with overdistension for both lungs.

Conclusions Despite asymmetrical lung injury, Ppl between injured and noninjured lungs is equalized and esophageal pressure is a reliable estimate of dorsal Ppl. Driving transpulmonary pressure is similar for both lungs. Vt distribution results from regional respiratory system compliance. Moderate PEEP homogenizes Vt distribution between lungs without generating hyperinflation.

✦ Association Between Troponin I Levels During Sepsis and Post-Sepsis Cardiovascular Complications

Reference: https://doi.org/10.1164/rccm.202103-0613OC

Impact of troponin elevation during and after sepsis

Rationale Sepsis commonly results in elevated serum troponin I levels and increased risk for post-sepsis cardiovascular complications; however, the association between troponin I level during sepsis and cardiovascular complications after sepsis is unclear.

Objectives To evaluate the association between serum troponin levels during sepsis and 1-year post-sepsis cardiovascular risks.

Methods We included patients aged >40 years without a diagnosis of cardiovascular disease within the prior 5 years, admitted with sepsis across 21 hospitals from 2011 to 2017. Peak serum troponin I levels during sepsis were grouped as normal (<0.04 ng/mL) or tertiles of abnormal (>0.04 to <0.09 ng/mL, >0.09 to <0.42 ng/mL, or >0.42 ng/mL). Multivariable adjusted, cause-specific, Cox proportional hazards models that treated death as a competing risk were used to assess associations between peak sepsis troponin I levels and a composite cardiovascular outcome (atherosclerotic cardiovascular disease, atrial fibrillation, and heart failure) in the year following sepsis. Models were adjusted for pre-sepsis and intra-sepsis factors considered potential confounders.

Measurements and Main Results Among 14,046 patients with troponin I measured during sepsis, 2,012 (14.3%) patients experienced the composite cardiovascular outcome in the year following sepsis hospitalization. Compared with patients with normal troponin levels, those with elevated troponins had increased risks of adverse cardiovascular events (adjusted hazard ratio [aHR] for troponin 0.04-0.09 ng/mL, 1.37 [95% CI 1.20-1.55]; aHR for troponin 0.09-0.42 ng/mL, 1.44 [95% CI 1.27-1.63]; aHR for troponin >0.42 ng/mL, 1.77 [95% CI 1.56-2.00]).

Conclusions Among patients without pre-existing cardiovascular disease, troponin elevation during sepsis identified patients at increased risk for post-sepsis cardiovascular complications. Strategies to mitigate cardiovascular complications among this high-risk subset of patients are warranted.

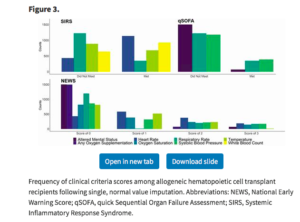

✦ Early Bacterial Identification Among Intubated Patients with COVID-19 or Influenza Pneumonia: A European Multicenter Comparative Cohort Study

Reference: https://doi.org/10.1164/rccm.202101-0030OC

Fewer early bacterial co-infections with COVID-19 than with influenza.

Rationale Early empirical antimicrobial treatment is frequently prescribed to critically ill patients with COVID-19, based on Surviving Sepsis Campaign guidelines.

Objectives We aimed to determine the prevalence of early bacterial identification in intubated patients with SARS-CoV-2 pneumonia, as compared to influenza pneumonia, and to characterize its microbiology and impact on outcomes.

Methods Multicenter retrospective European cohort performed in 36 ICUs. All adult patients receiving invasive mechanical ventilation >48h were eligible if they had SARS-CoV-2 or influenza pneumonia at ICU admission. Bacterial identification was defined by a positive bacterial culture, within 48h after intubation, in endotracheal aspirates, bronchoalveolar lavage, blood cultures, or a positive pneumococcal or legionella urinary antigen test.

Measurements and Main Results 1,050 patients were included (568 in SARS-CoV-2 and 482 in influenza groups). The prevalence of bacterial identification was significantly lower in patients with SARS-CoV-2 pneumonia as compared to patients with influenza pneumonia (9.7 vs 33.6%, unadjusted odds ratio (OR) 0.21 (95% confidence interval (CI) 0.15 to 0.30), adjusted OR 0.23 (95% CI 0.16 to 0.33), p<0.0001). Gram-positive cocci were responsible for 58% and 72% of co-infection in patients with SARS-CoV-2 and influenza pneumonia, respectively. Bacterial identification was associated with increased adjusted hazard ratio for 28-day mortality in patients with SARS-CoV-2 pneumonia (1.57 (95% CI 1.01 to 2.44), p=0.043). However, no significant difference was found in heterogeneity of outcomes related to bacterial identification between the two study groups, suggesting that the impact of co-infection on mortality was not different between SARS-CoV-2 and influenza patients.
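The unadjusted odds ratio can be recovered from the two prevalences alone, which is a quick way to check how a 9.7% vs 33.6% difference translates into an OR near 0.21. A minimal sketch, assuming only the rounded percentages from the abstract (the function name `odds_ratio` is illustrative; the adjusted OR cannot be reproduced this way because it depends on the covariates in the authors' model):

```python
def odds_ratio(p_group: float, p_reference: float) -> float:
    """Odds ratio of an event in one group versus a reference group,
    computed from the event proportions in each group."""
    odds_group = p_group / (1 - p_group)
    odds_reference = p_reference / (1 - p_reference)
    return odds_group / odds_reference


# Prevalence of early bacterial identification reported in the abstract
sars_cov_2 = 0.097
influenza = 0.336

print(round(odds_ratio(sars_cov_2, influenza), 2))  # 0.21
```

Note that the OR (0.21) is further from 1 than the simple ratio of prevalences (9.7/33.6 ≈ 0.29), as expected when the event is common in the reference group.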

Conclusions Bacterial identification within 48h after intubation is significantly less frequent in patients with SARS-CoV-2 pneumonia as compared to patients with influenza pneumonia.

✦ Effect of Lowering Vt on Mortality in Acute Respiratory Distress Syndrome Varies with Respiratory System Elastance

Reference: https://doi.org/10.1164/rccm.202009-3536OC

Ventilation tailored to compliance rather than to Vt alone.

Rationale If the risk of ventilator-induced lung injury in acute respiratory distress syndrome (ARDS) is causally determined by driving pressure rather than by Vt, then the effect of ventilation with lower Vt on mortality would be predicted to vary according to respiratory system elastance (Ers).

Objectives To determine whether the mortality benefit of ventilation with lower Vt varies according to Ers.

Methods In a secondary analysis of patients from five randomized trials of lower- versus higher-Vt ventilation strategies in ARDS and acute hypoxemic respiratory failure, the posterior probability of an interaction between the randomized Vt strategy and Ers on 60-day mortality was computed using Bayesian multivariable logistic regression.

Measurements and Main Results Of 1,096 patients available for analysis, 416 (38%) died by Day 60. The posterior probability that the mortality benefit from lower-Vt ventilation strategies varied with Ers was 93% (posterior median interaction odds ratio, 0.80 per cm H2O/[ml/kg]; 90% credible interval, 0.63–1.02). Ers was classified as low (<2 cm H2O/[ml/kg], n = 321, 32%), intermediate (2–3 cm H2O/[ml/kg], n = 475, 46%), and high (>3 cm H2O/[ml/kg], n = 224, 22%). In these groups, the posterior probabilities of an absolute risk reduction in mortality ≥ 1% were 55%, 82%, and 92%, respectively. The posterior probabilities of an absolute risk reduction ≥ 5% were 29%, 58%, and 82%, respectively.

Conclusions The mortality benefit of ventilation with lower Vt in ARDS varies according to elastance, suggesting that lung-protective ventilation strategies should primarily target driving pressure rather than Vt.
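The reasoning behind this conclusion rests on the definition of elastance: driving pressure equals Ers multiplied by Vt, so the same Vt reduction lowers driving pressure more when Ers is high. A minimal sketch of that arithmetic, with Ers expressed per ml/kg as in the abstract; the Vt values of 10 and 6 ml/kg and the three Ers values are illustrative assumptions, not figures taken from the pooled trials:

```python
def driving_pressure(ers: float, vt: float) -> float:
    """Driving pressure (cm H2O) from elastance (cm H2O per ml/kg)
    and tidal volume (ml/kg)."""
    return ers * vt


# Hypothetical Vt strategies, chosen only for illustration (ml/kg)
HIGHER_VT = 10.0
LOWER_VT = 6.0

# One illustrative Ers value per category: low (<2), intermediate (2-3), high (>3)
for label, ers in (("low", 1.5), ("intermediate", 2.5), ("high", 3.5)):
    reduction = driving_pressure(ers, HIGHER_VT) - driving_pressure(ers, LOWER_VT)
    print(f"{label} Ers ({ers}): driving pressure falls by {reduction:.1f} cm H2O")
```

With these assumed values, the same 4 ml/kg reduction in Vt lowers driving pressure by 6 cm H2O at low Ers and by 14 cm H2O at high Ers, which is the kind of gradient the interaction analysis was designed to detect.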

✦ COVID-19 ARDS is characterized by higher extravascular lung water than non-COVID-19 ARDS: the PiCCOVID study

Reference: https://ccforum.biomedcentral.com/articles/10.1186/s13054-021-03594-6

Characterization of the COVID-19-related ARDS profile, and how it differs from other ARDS, using PiCCO

Background In acute respiratory distress syndrome (ARDS), extravascular lung water index (EVLWi) and pulmonary vascular permeability index (PVPI) measured by transpulmonary thermodilution reflect the degree of lung injury. Whether EVLWi and PVPI are different between non-COVID-19 ARDS and the ARDS due to COVID-19 has never been reported. We aimed at comparing EVLWi, PVPI, respiratory mechanics and hemodynamics in patients with COVID-19 ARDS vs. ARDS of other origin.

Methods Between March and October 2020, in an observational study conducted in intensive care units from three university hospitals, 60 patients with COVID-19-related ARDS monitored by transpulmonary thermodilution were compared to the 60 consecutive patients with non-COVID-19 ARDS admitted immediately before the COVID-19 outbreak, between December 2018 and February 2020.

Results Driving pressure was similar between patients with COVID-19 and non-COVID-19 ARDS, at baseline as well as during the study period. Compared to patients without COVID-19, those with COVID-19 exhibited higher EVLWi, both at the baseline (17 (14–21) vs. 15 (11–19) mL/kg, respectively, p = 0.03) and at the time of its maximal value (24 (18–27) vs. 21 (15–24) mL/kg, respectively, p = 0.01). Similar results were observed for PVPI. In COVID-19 patients, the worst ratio between arterial oxygen partial pressure over oxygen inspired fraction was lower (81 (70–109) vs. 100 (80–124) mmHg, respectively, p = 0.02) and prone positioning and extracorporeal membrane oxygenation (ECMO) were more frequently used than in patients without COVID-19. COVID-19 patients had lower maximal lactate level and maximal norepinephrine dose than patients without COVID-19. Day-60 mortality was similar between groups (57% vs. 65%, respectively, p = 0.45). The maximal value of EVLWi and PVPI remained independently associated with outcome in the whole cohort.

Conclusion Compared to ARDS patients without COVID-19, patients with COVID-19 had similar lung mechanics, but higher EVLWi and PVPI values from the beginning of the disease. This was associated with worse oxygenation and with more requirement of prone positioning and ECMO. This is compatible with the specific lung inflammation and severe diffuse alveolar damage related to COVID-19. By contrast, patients with COVID-19 had less hemodynamic derangement. Ultimately, mortality was similar between groups.

FROM OTHER SPECIALTIES:

✦ Perioperative Neurological Evaluation and Management to Lower the Risk of Acute Stroke in Patients Undergoing Noncardiac, Nonneurological Surgery: A Scientific Statement From the American Heart Association/American Stroke Association

Reference: DOI: 10.1161/CIR.0000000000000968

Perioperative stroke is a potentially devastating complication in patients undergoing noncardiac, nonneurological surgery. This scientific statement summarizes established risk factors for perioperative stroke, preoperative and intraoperative strategies to mitigate the risk of stroke, suggestions for postoperative assessments, and treatment approaches for minimizing permanent neurological dysfunction in patients who experience a perioperative stroke. The first section focuses on preoperative optimization, including the role of preoperative carotid revascularization in patients with high-grade carotid stenosis and delaying surgery in patients with recent strokes. The second section reviews intraoperative strategies to reduce the risk of stroke, focusing on blood pressure control, perioperative goal-directed therapy, blood transfusion, and anesthetic technique. Finally, this statement presents strategies for the evaluation and treatment of patients with suspected postoperative strokes and, in particular, highlights the value of rapid recognition of strokes and the early use of intravenous thrombolysis and mechanical embolectomy in appropriate patients.

✦ Comparative Effectiveness of Heart Rate Control Medications for the Treatment of Sepsis-Associated Atrial Fibrillation

Reference: DOI: 10.1016/j.chest.2020.10.049

Retrospective study comparing the drugs used to slow heart rate (target < 110 bpm) in septic patients with AF. Four classes are compared; note that the β-blocker class included two drugs, metoprolol and esmolol, which differ in their properties and route of administration. Although β-blockers achieve better heart rate control within the first hour after administration, by the sixth hour control is similar between β-blockers and amiodarone. These results should be interpreted with caution because of an imbalance between groups at treatment initiation (patients in the amiodarone group were more severely ill). As a reminder, the ESC issued recent guidelines (2020) on the management of AF. Unfortunately, these guidelines give very little consideration to the ICU patient.

Background Atrial fibrillation (AF) with rapid ventricular response frequently complicates the management of critically ill patients with sepsis and may necessitate the initiation of medication to avoid hemodynamic compromise. However, the optimal medication to achieve rate control for AF with rapid ventricular response in sepsis is unclear.

Research Question What is the comparative effectiveness of frequently used AF medications (β-blockers, calcium channel blockers, amiodarone, and digoxin) on heart rate (HR) reduction among critically ill patients with sepsis and AF with rapid ventricular response?

Study Design and Methods We conducted a multicenter retrospective cohort study among patients with sepsis and AF with rapid ventricular response (HR > 110 beats/min). We compared the rate control effectiveness of β-blockers to calcium channel blockers, amiodarone, and digoxin using multivariate-adjusted, time-varying exposures in competing risk models (for death and addition of another AF medication), adjusting for fixed and time-varying confounders.

Results Among 666 included patients, 50.6% initially received amiodarone, 10.1% received a β-blocker, 33.8% received a calcium channel blocker, and 5.6% received digoxin. The adjusted hazard ratio for HR of < 110 beats/min by 1 h was 0.50 (95% CI, 0.34-0.74) for amiodarone vs β-blocker, 0.37 (95% CI, 0.18-0.77) for digoxin vs β-blocker, and 0.75 (95% CI, 0.51-1.11) for calcium channel blocker vs β-blocker. By 6 h, the adjusted hazard ratio for HR < 110 beats/min was 0.67 (95% CI, 0.47-0.97) for amiodarone vs β-blocker, 0.60 (95% CI, 0.36-1.004) for digoxin vs β-blocker, and 1.03 (95% CI, 0.71-1.49) for calcium channel blocker vs β-blocker.

Interpretation In a large cohort of patients with sepsis and AF with rapid ventricular response, a β-blocker treatment strategy was associated with improved HR control at 1 h, but generally similar HR control at 6 h compared with amiodarone, calcium channel blocker, or digoxin.

✦ Aspiration Risk Factors, Microbiology, and Empiric Antibiotics for Patients Hospitalized With Community-Acquired Pneumonia

Reference: DOI: 10.1016/j.chest.2020.06.079

Discussion of the value of adding anaerobic coverage in community-acquired pneumonia with aspiration or risk factors for aspiration. A very common prescription, but perhaps not a justified one.